cf-for-k8s pipeline for Concourse CI

This is a short run-through of my cf-for-k8s deployment on AKS on Azure Stack. It will be updated continuously. To understand the scripts I use, see the included links :-)

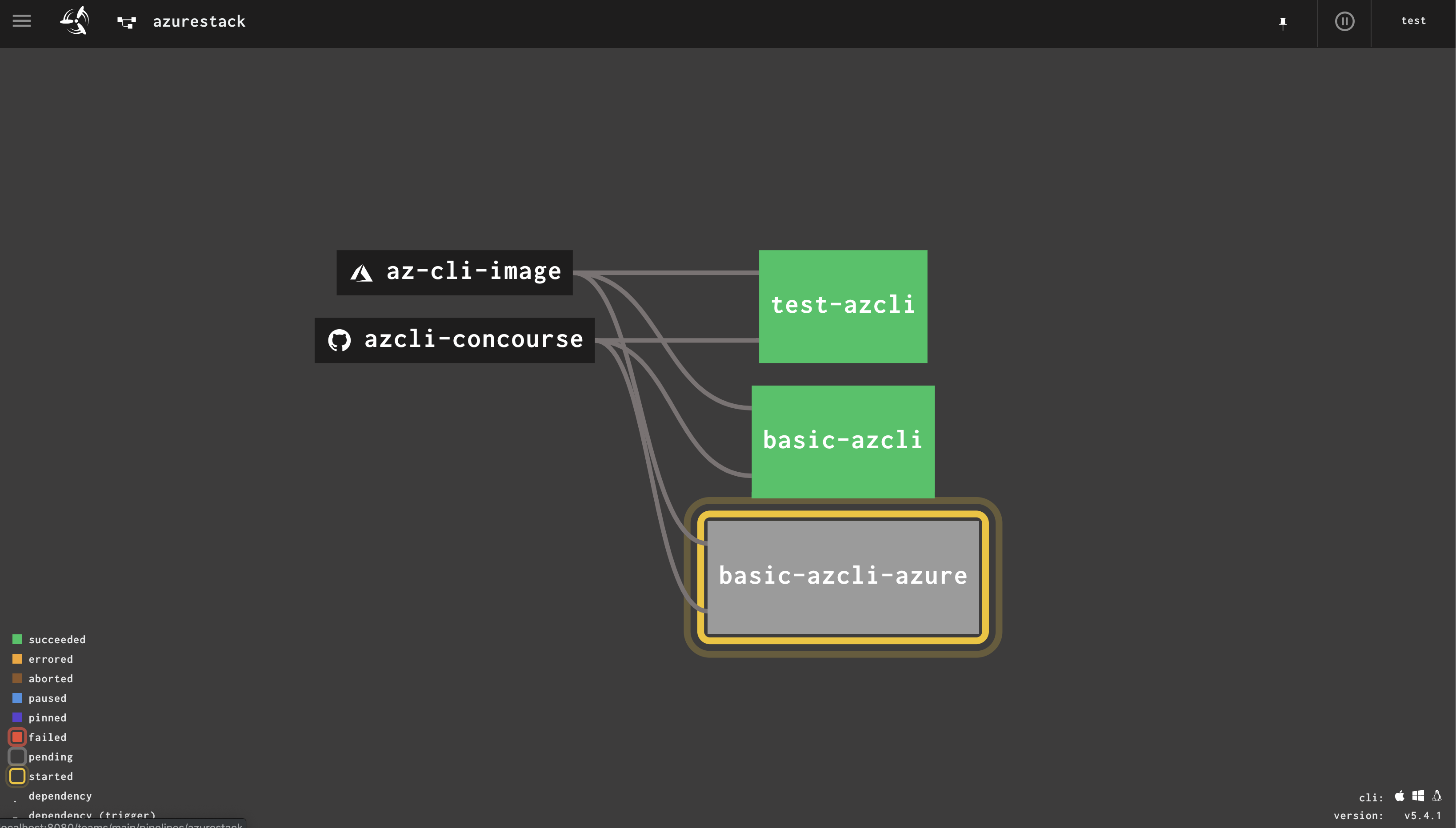

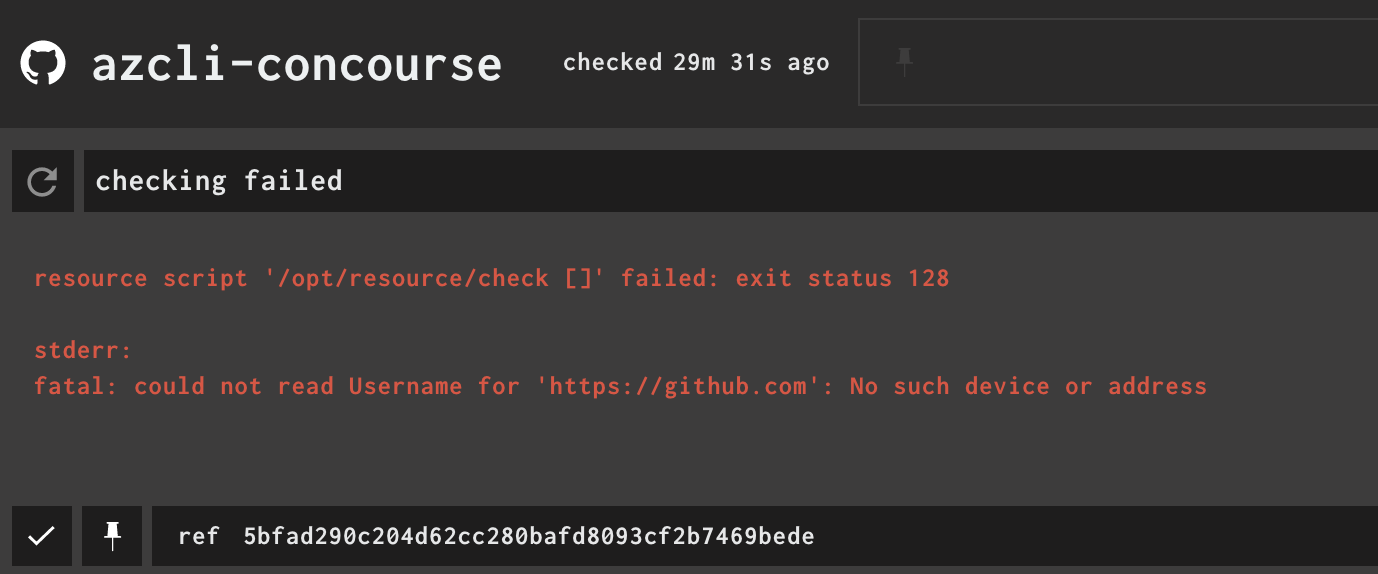

Before getting started: the cf-for-k8s installation is pretty straightforward. In this example I am using Concourse CI, and the deployment scripts are custom tasks from my GitHub repo azs-concourse; the pipeline builds on Platform Automation.

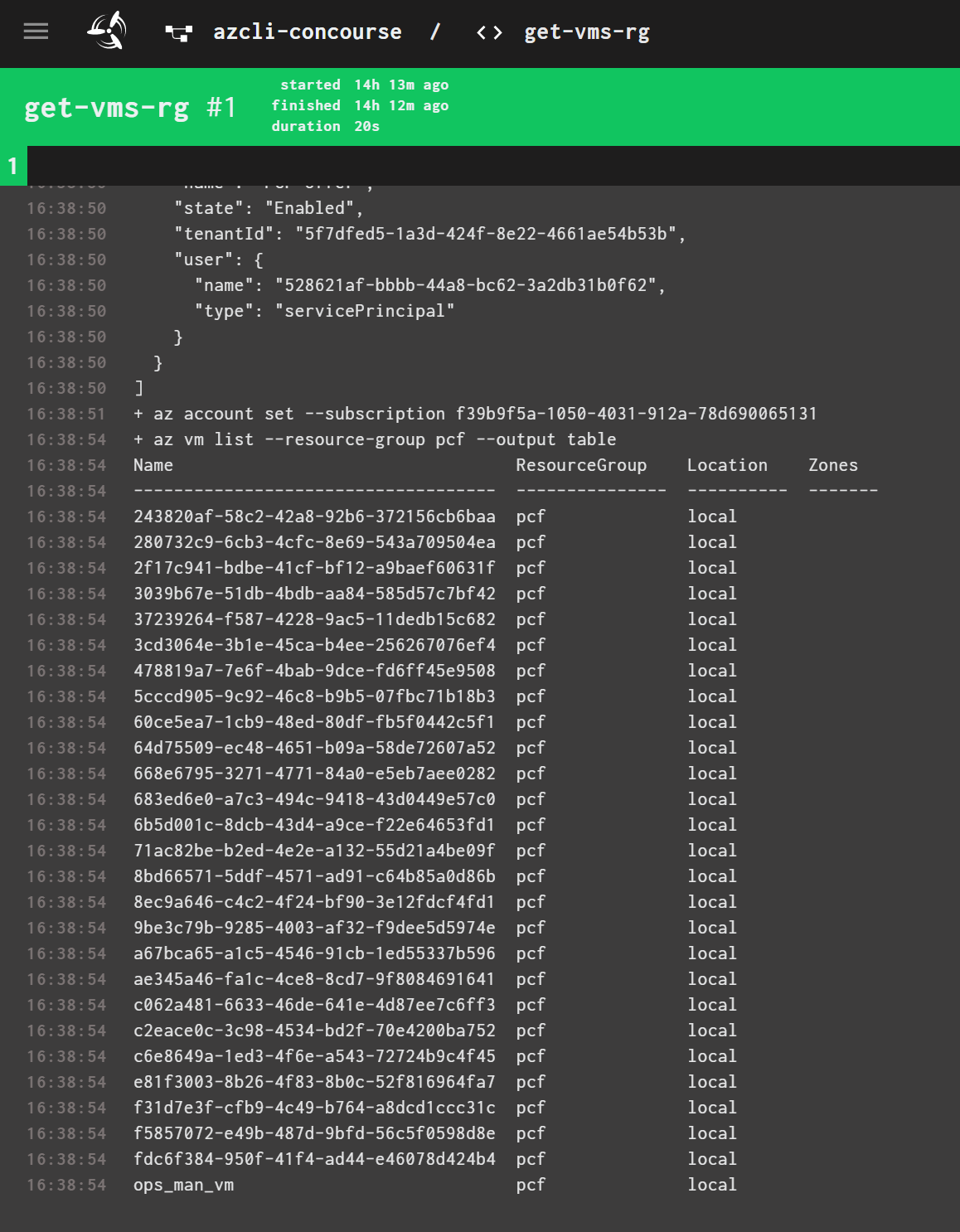

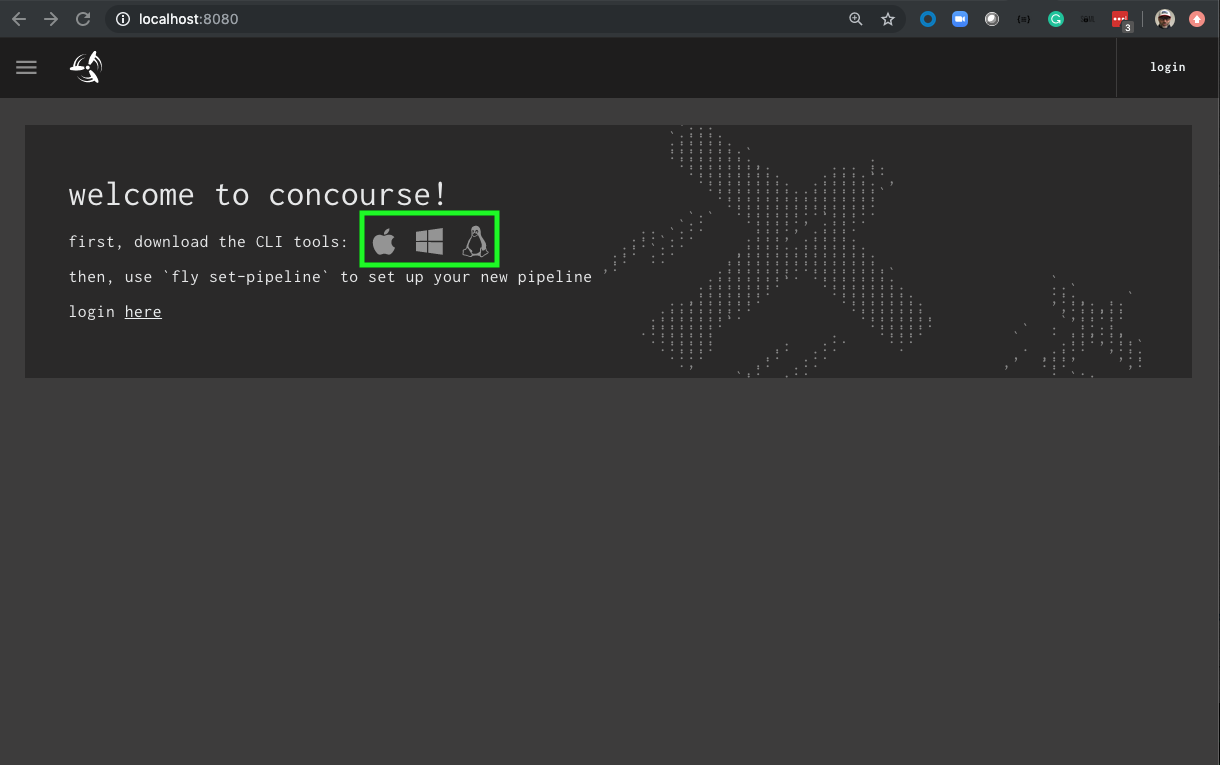

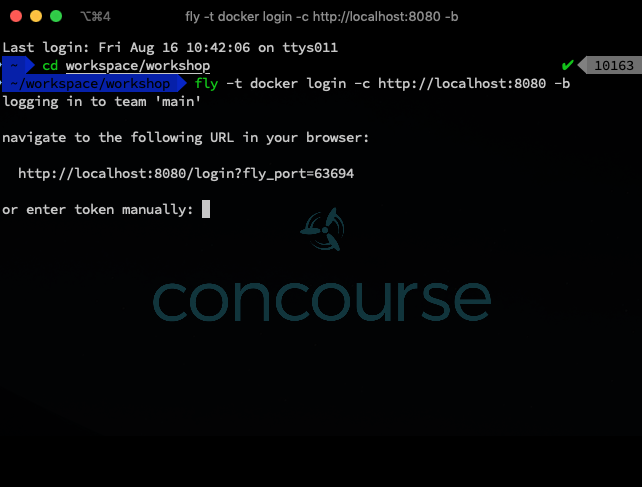

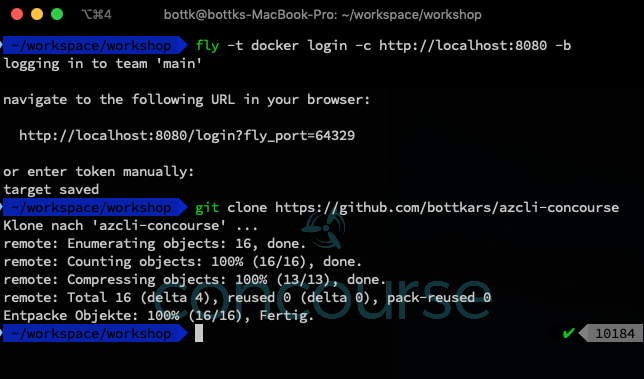

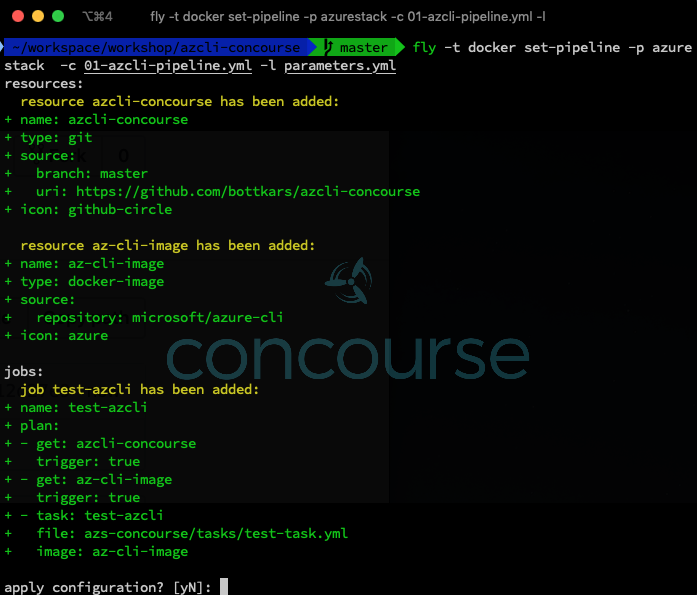

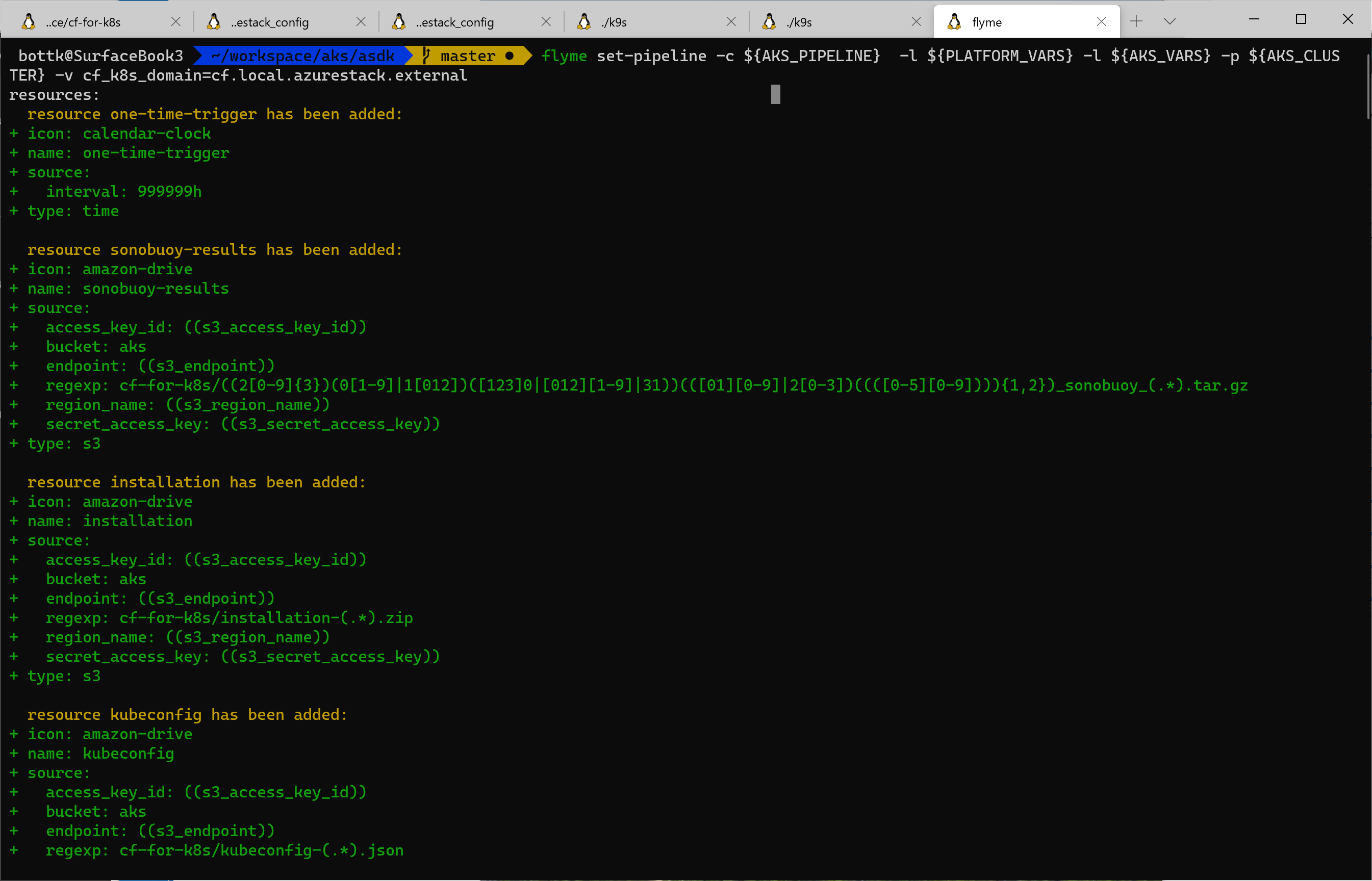

So we start by setting the pipeline:

```shell
fly -t concourse_target set-pipeline -c ${AKS_PIPELINE} \
  -l ${PLATFORM_VARS} \
  -l ${AKS_VARS} \
  -p ${AKS_CLUSTER} \
  -v cf_k8s_domain=cf.local.azurestack.external
```

In the above call, the following aliases / variables are used:

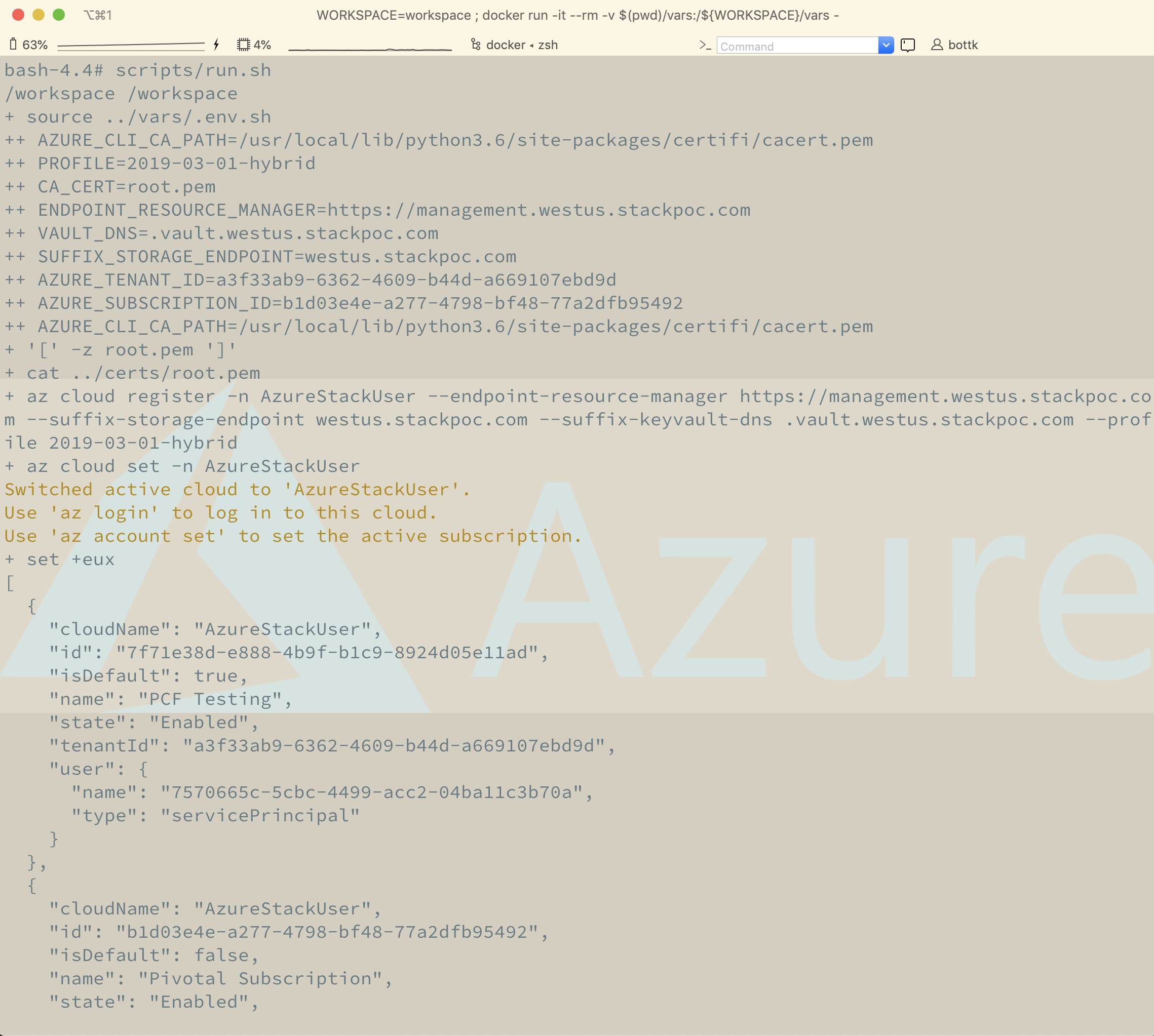

- AKS_PIPELINE: the pipeline file
- PLATFORM_VARS: variables containing essential, pipeline-independent environment variables, e.g. Azure Stack endpoints (prefixed with AZURE_) and general vars. The following must resolve:

azure_env: &azure_env

PROFILE: ((azs.arm_profile))

CA_CERT: ((azs_ca.certificate))

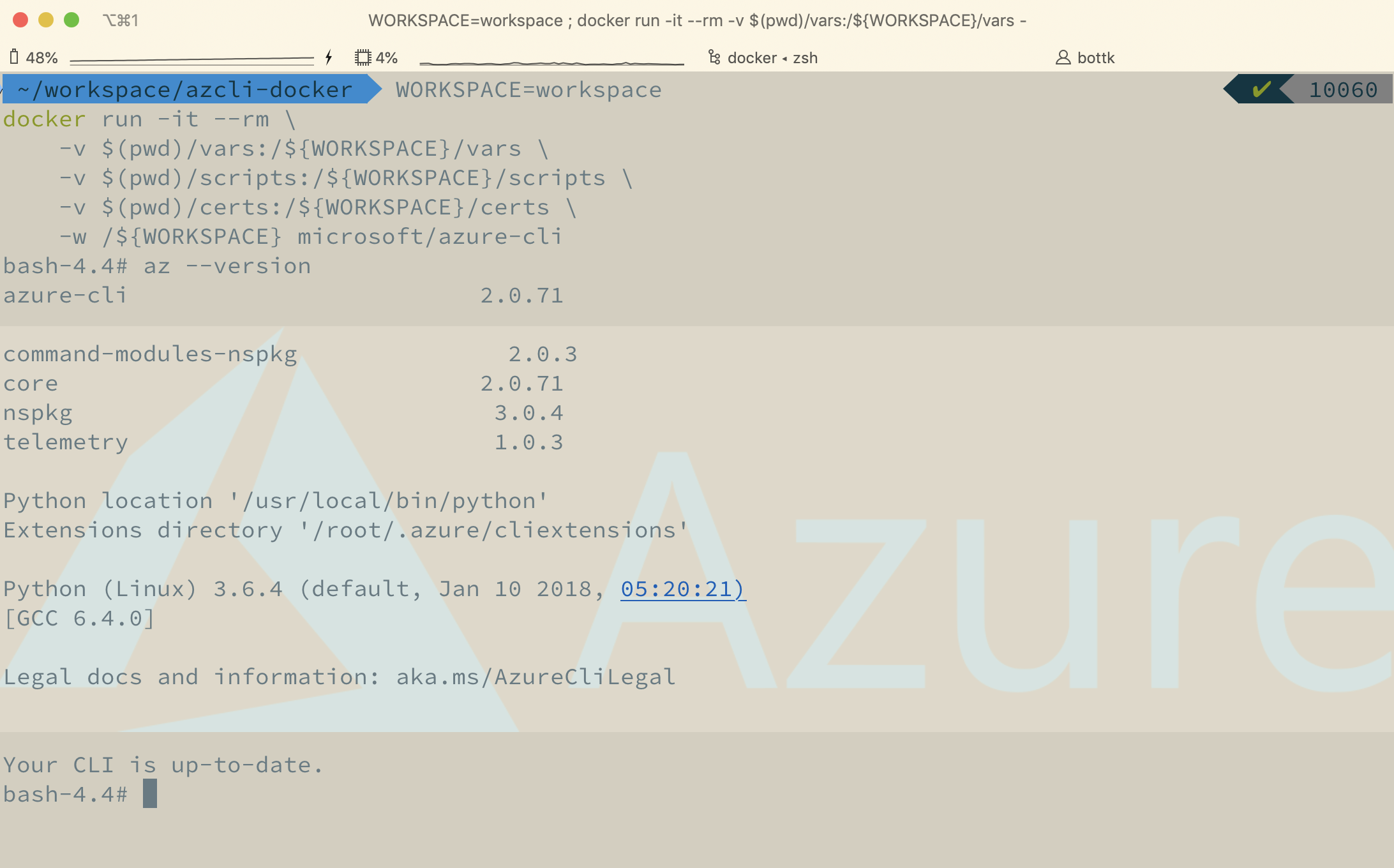

AZURE_CLI_CA_PATH: /opt/az/lib/python3.6/site-packages/certifi/cacert.pem

ENDPOINT_RESOURCE_MANAGER: ((endpoint-resource-manager))

VAULT_DNS: ((azs.vault_dns))

SUFFIX_STORAGE_ENDPOINT: ((azs.suffix_storage_endpoint))

AZURE_TENANT_ID: ((tenant_id))

AZURE_CLIENT_ID: ((client_id))

AZURE_CLIENT_SECRET: ((client_secret))

AZURE_SUBSCRIPTION_ID: ((subscription_id))

RESOURCE_GROUP: ((aks.resource_group))

LOCATION: ((azs.azurestack_region))

- AKS_VARS: essentially, vars to control AKS Engine (cluster, size, etc.). Example AKS_VARS:

```yaml
azs_concourse_branch: tanzu
aks:
  team: aks
  bucket: aks
  resource_group: cf-for-k8s
  orchestrator_release: 1.15
  orchestrator_version: 1.15.4
  orchestrator_version_update: 1.16.1
  engine_tagfilter: "v0.43.([1])"
  master:
    dns_prefix: cf-for-k8s
    vmsize: Standard_D2_v2
    node_count: 3 # 1, 3 or 5, at least 3 for upgradeable
    distro: aks-ubuntu-16.04
  agent:
    0:
      vmsize: Standard_D3_v2
      node_count: 3
      distro: aks-ubuntu-16.04
      new_node_count: 6
      pool_name: linuxpool
      ostype: Linux
  ssh_public_key: ssh-rsa AAAAB...
```
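Because the set-pipeline call depends on three files plus a pipeline name (AKS_CLUSTER, passed with `-p`), a small preflight check can catch a wrong path before fly runs. This is a minimal POSIX shell sketch; the function name `check_pipeline_inputs` and the example paths are hypothetical and not part of azs-concourse.

```shell
#!/bin/sh
# Hypothetical preflight check for the fly set-pipeline call (not part of azs-concourse).
# Arguments: pipeline file, platform vars file, AKS vars file, pipeline name.
check_pipeline_inputs() {
  if [ "$#" -ne 4 ] || [ -z "$4" ]; then
    echo "usage: check_pipeline_inputs PIPELINE PLATFORM_VARS AKS_VARS PIPELINE_NAME" >&2
    return 1
  fi
  # The first three arguments must be existing files that fly can load.
  for f in "$1" "$2" "$3"; do
    if [ ! -f "$f" ]; then
      echo "file not found: '$f'" >&2
      return 1
    fi
  done
  return 0
}

# Example usage; the paths are placeholders for your own files:
# export AKS_PIPELINE=./pipeline.yml PLATFORM_VARS=./platform.yml AKS_VARS=./aks.yml AKS_CLUSTER=cf-for-k8s
# check_pipeline_inputs "$AKS_PIPELINE" "$PLATFORM_VARS" "$AKS_VARS" "$AKS_CLUSTER" &&
#   fly -t concourse_target set-pipeline -c "$AKS_PIPELINE" \
#     -l "$PLATFORM_VARS" -l "$AKS_VARS" -p "$AKS_CLUSTER" \
#     -v cf_k8s_domain=cf.local.azurestack.external
```

Chaining with `&&` means fly is only called once all inputs look sane.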

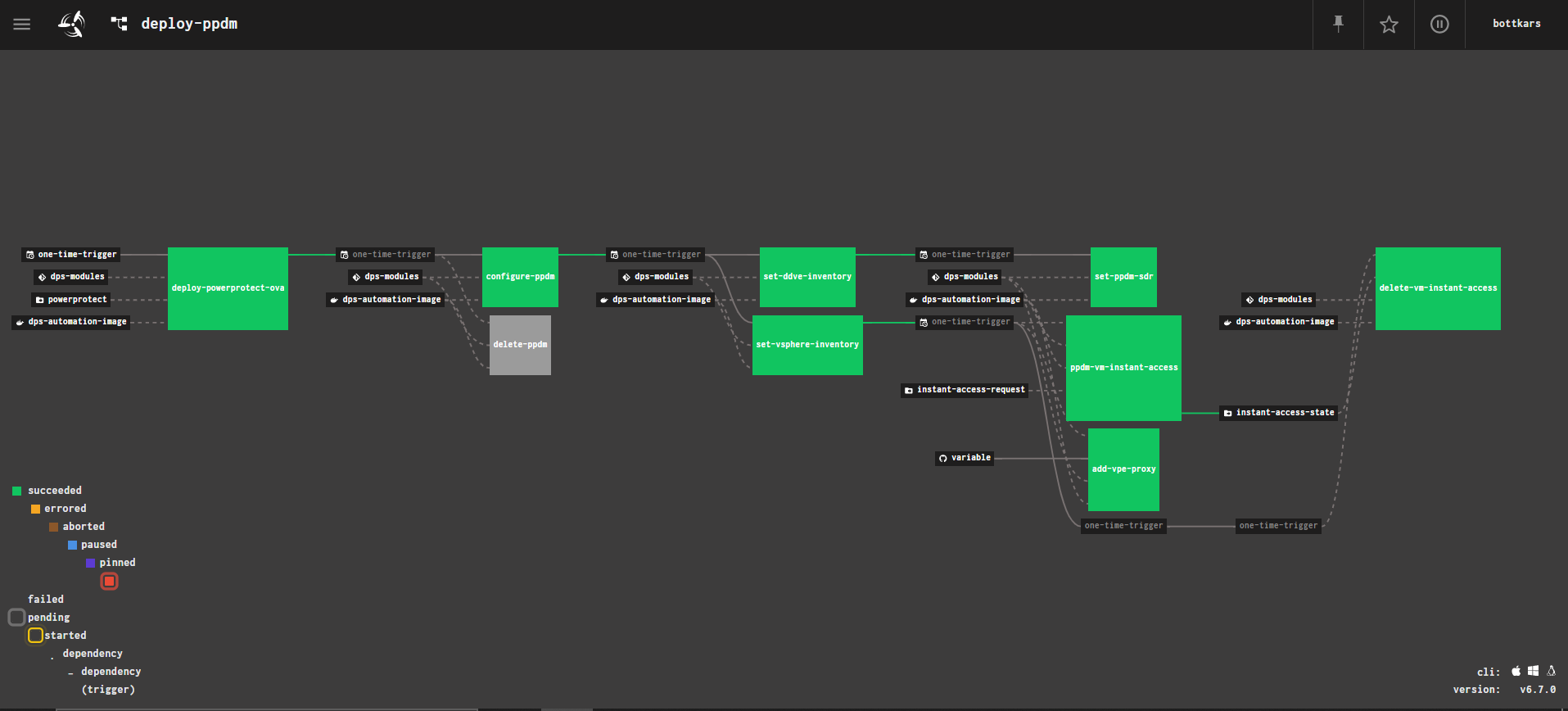

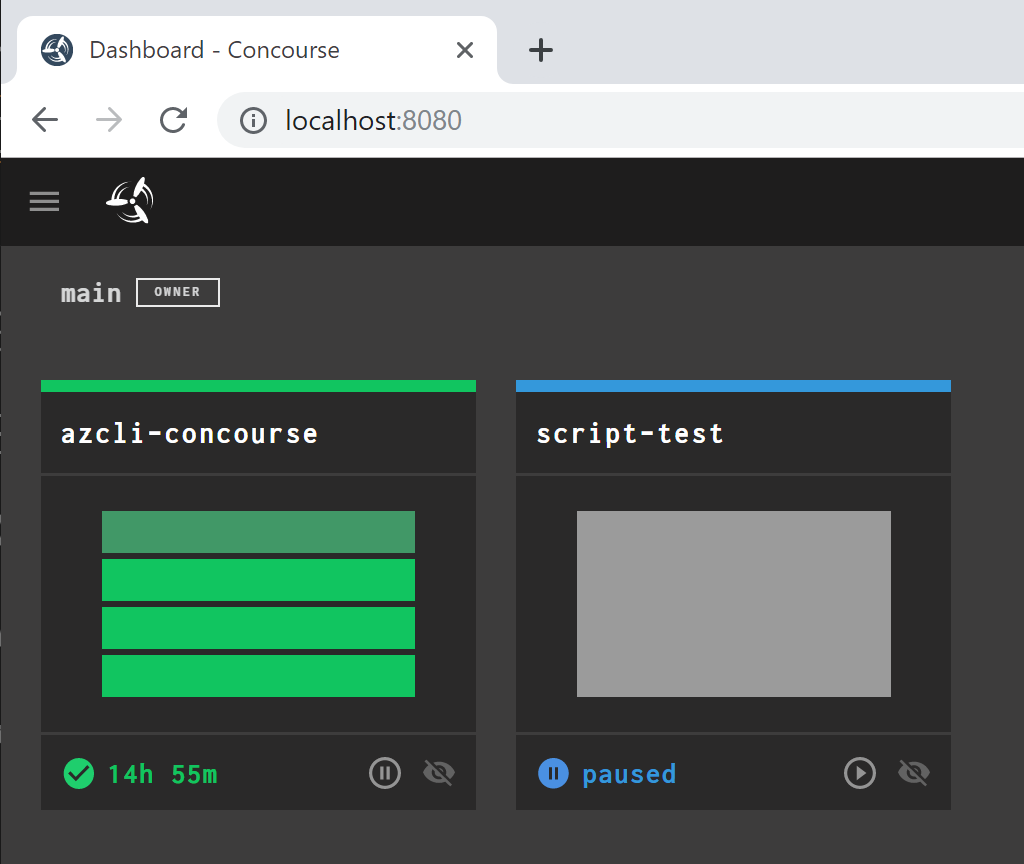

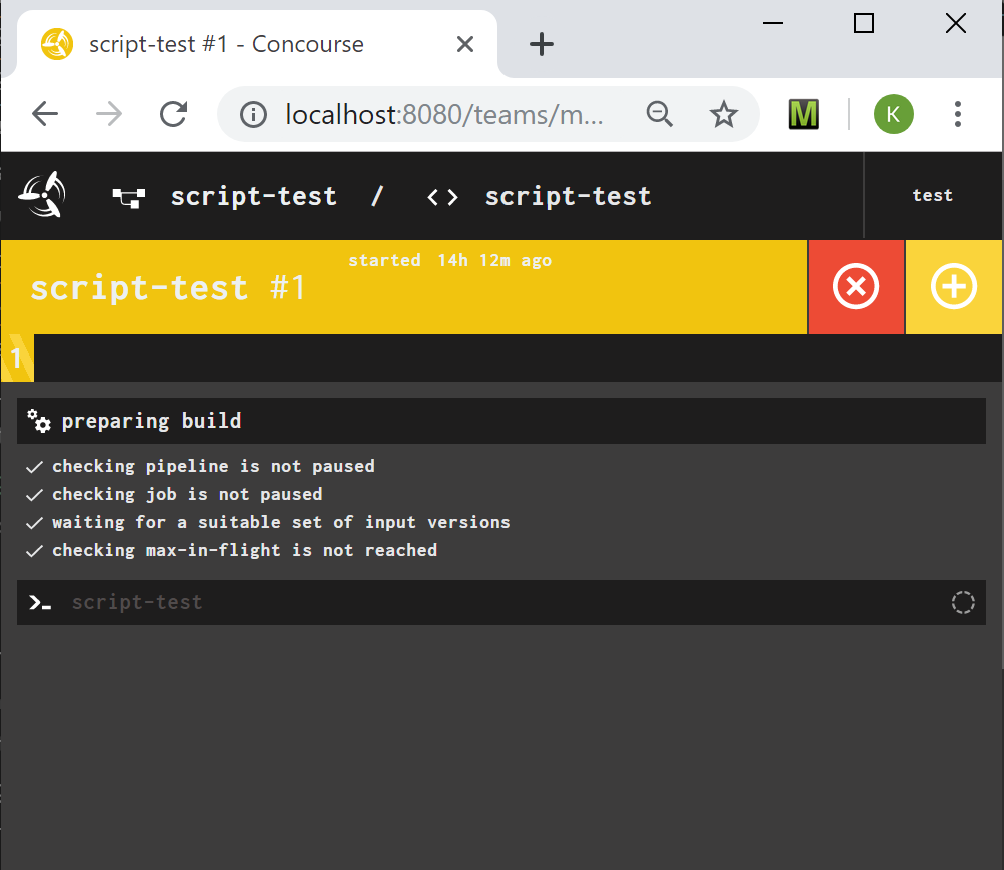

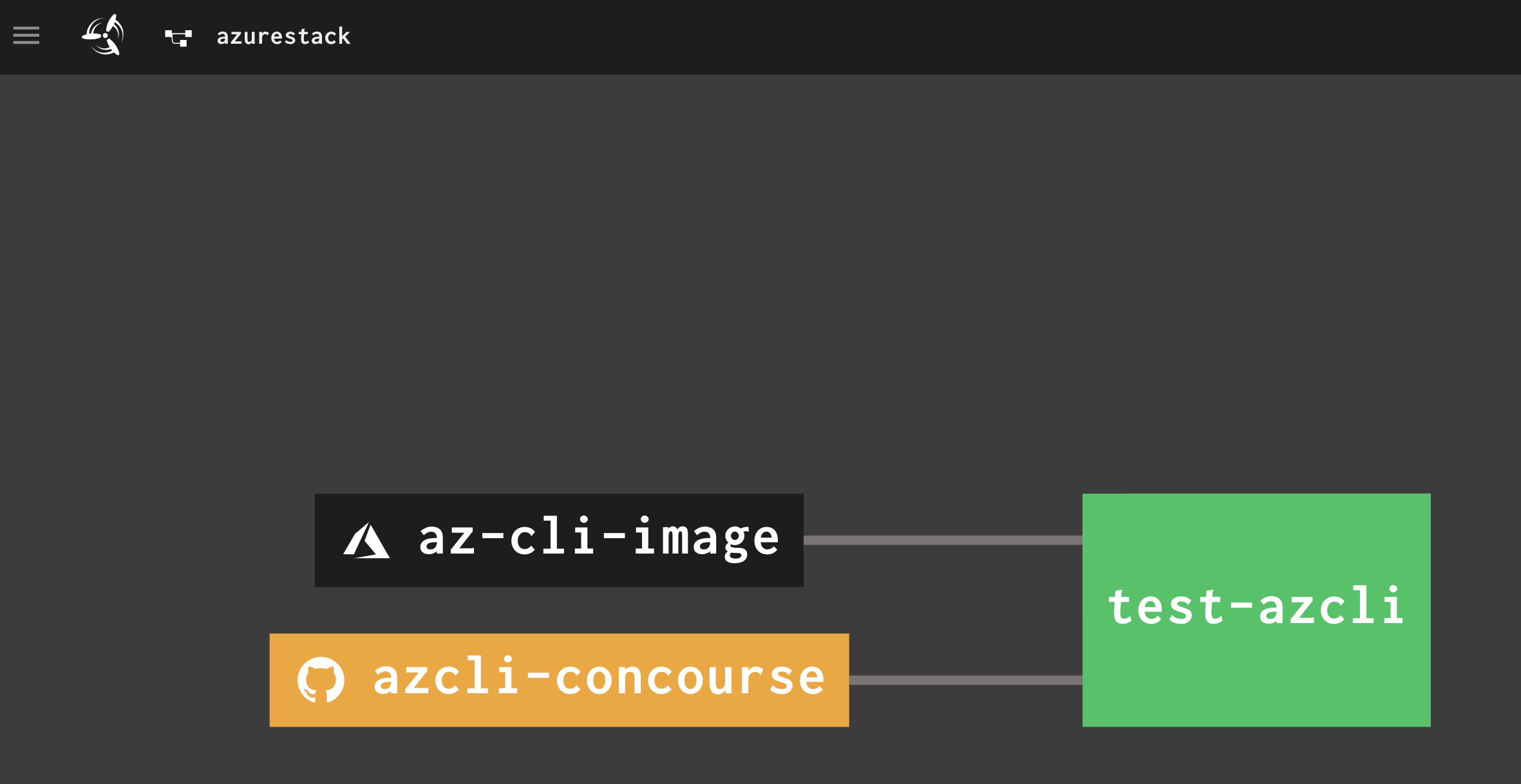

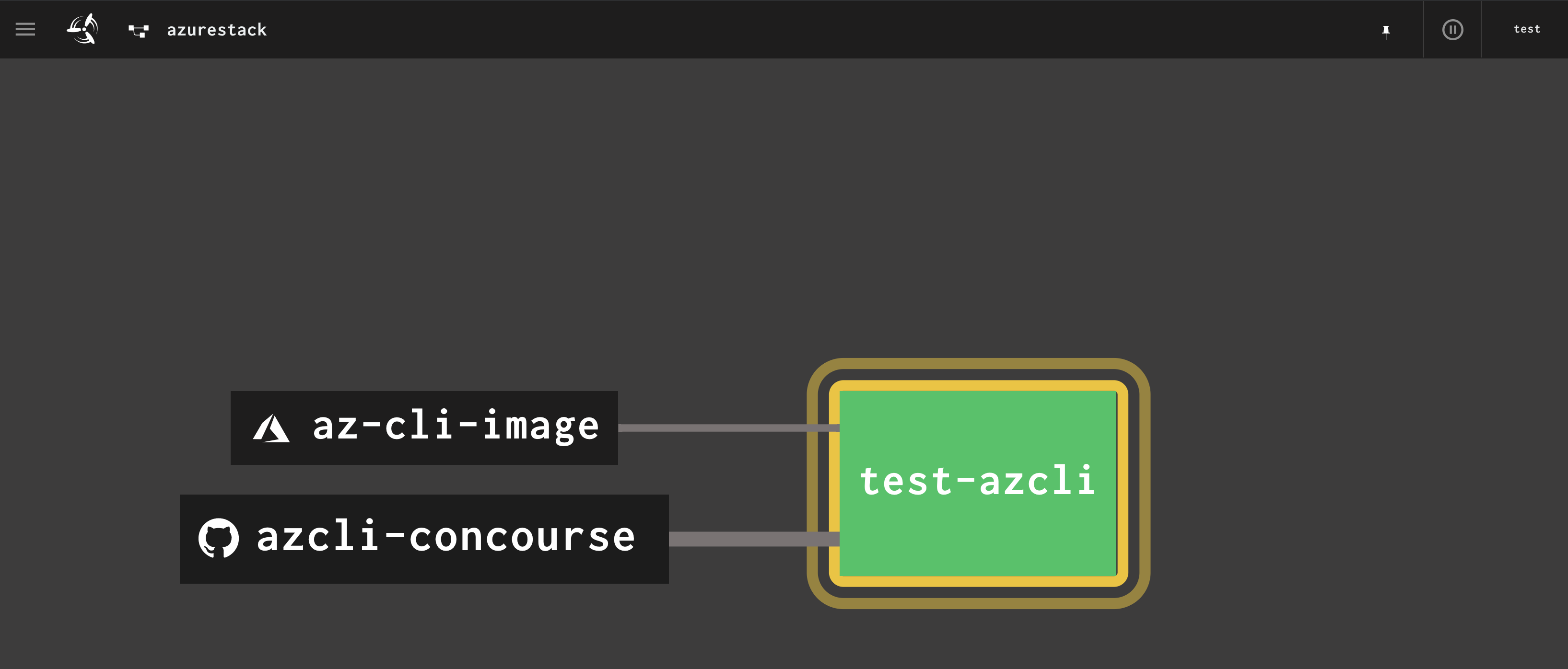

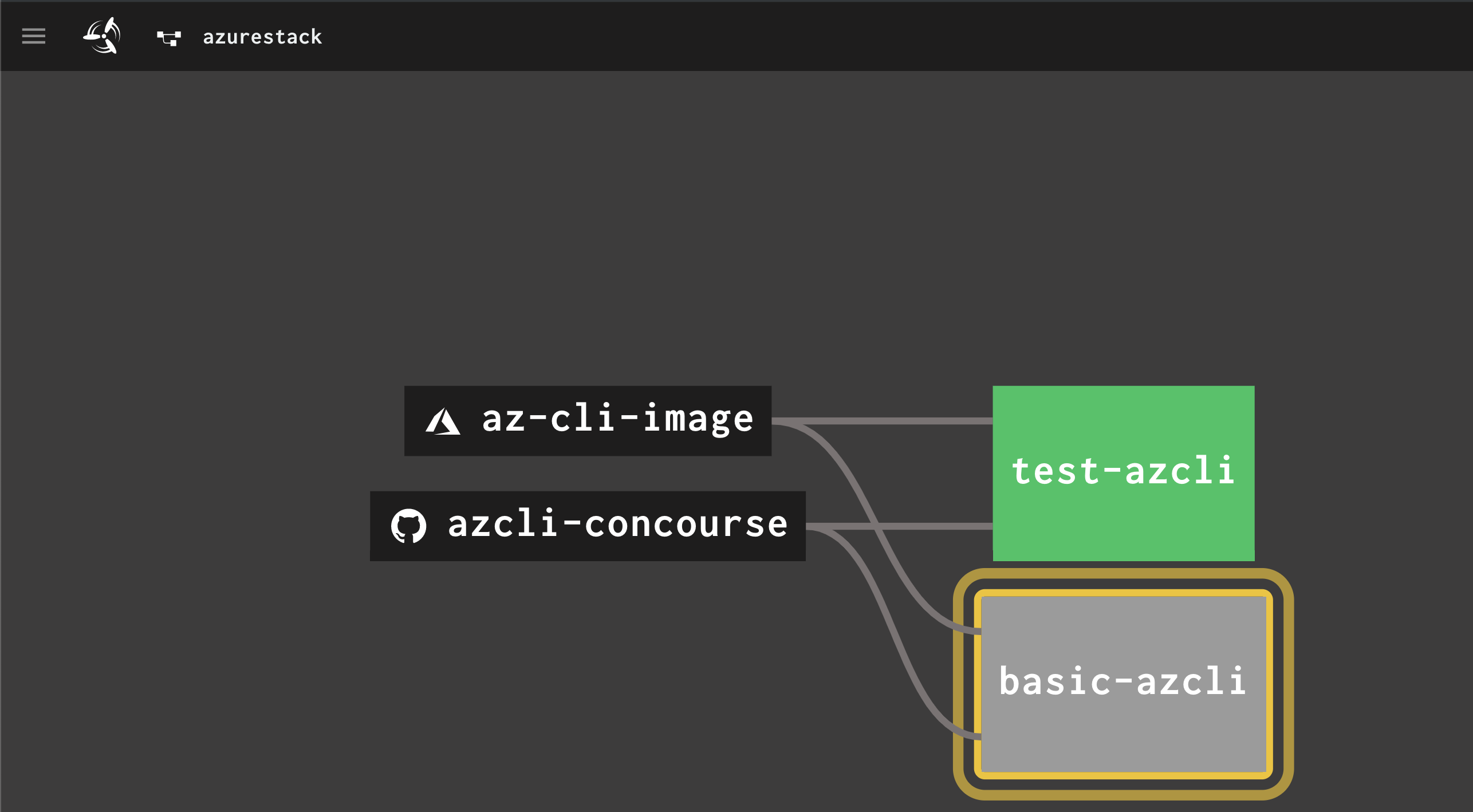

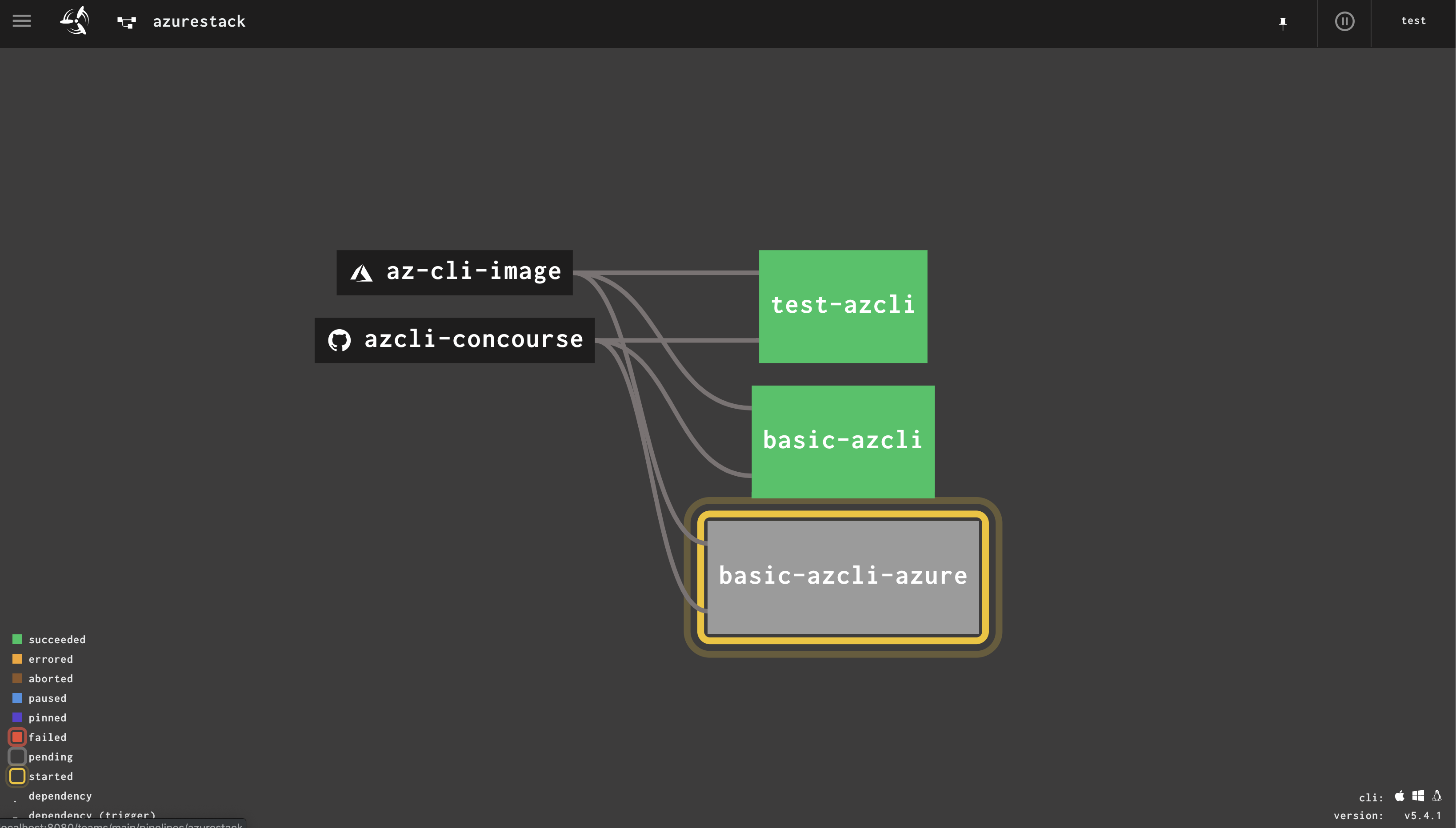

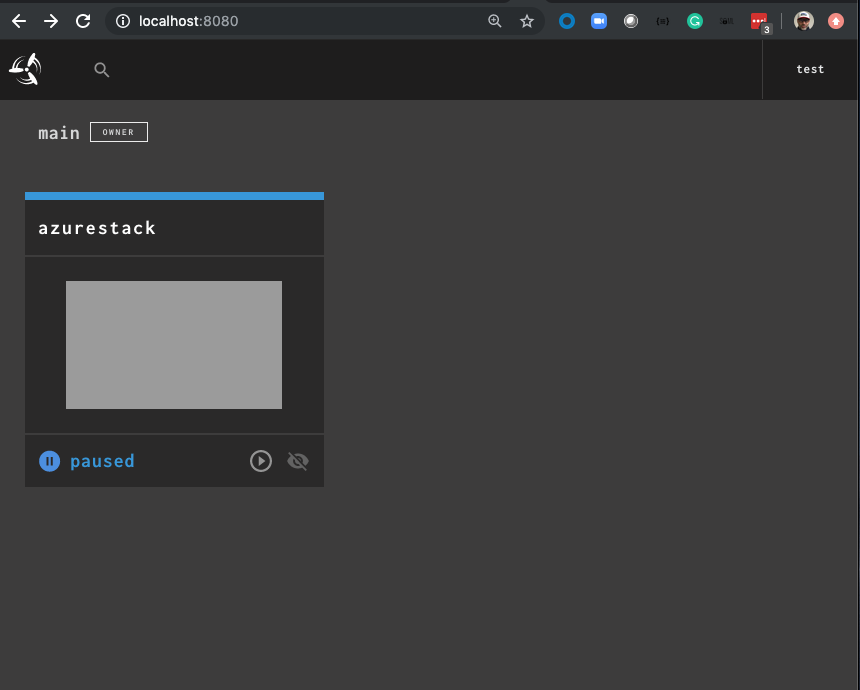

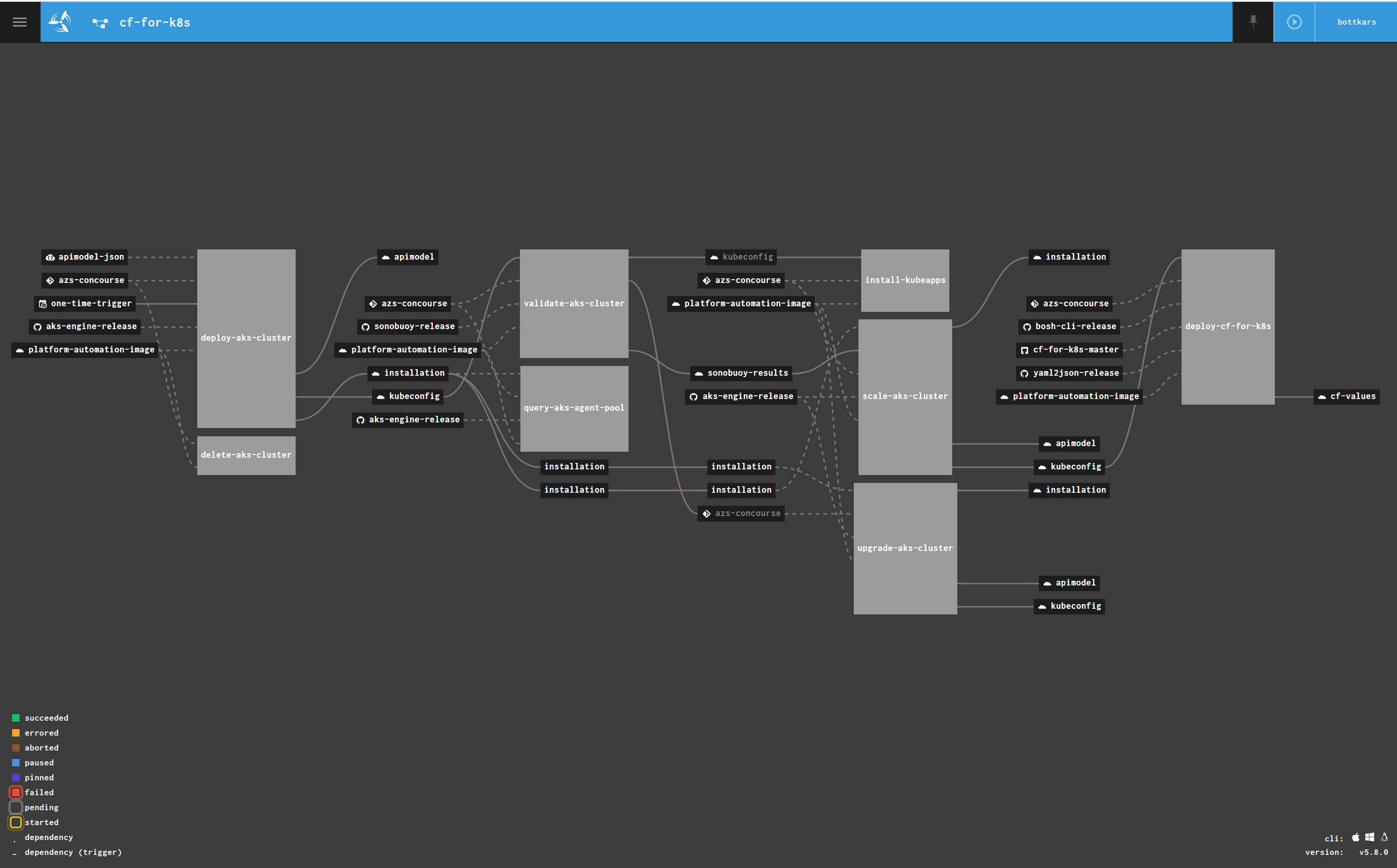

We should now have a paused cf-for-k8s pipeline in our UI:

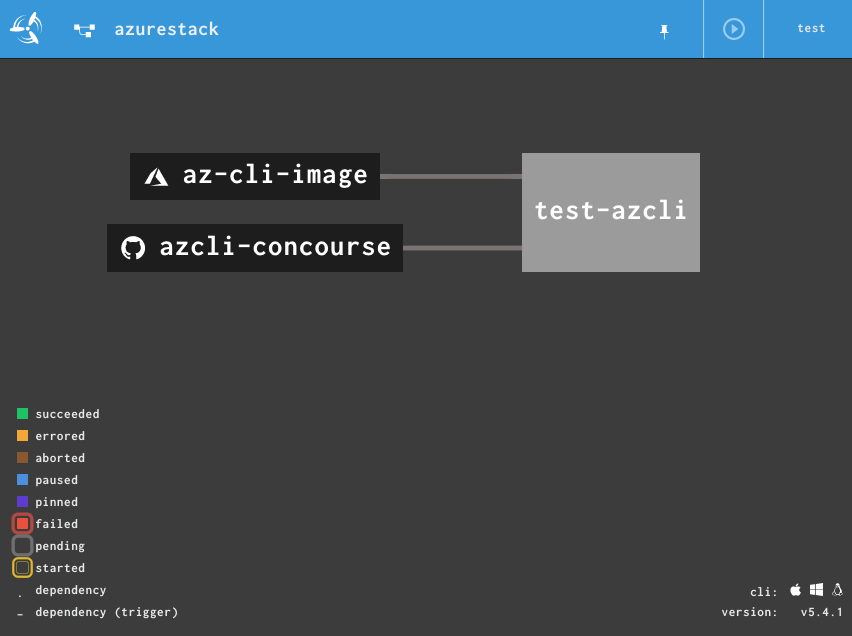

The pipeline has the following task flow:

- deploy-aks-cluster (ca. 12 minutes)

- validate-aks-cluster (sonobuoy basic validation, ca. 5 minutes)

- install kubeapps (default in my clusters, Bitnami catalog for Helm repos)

- scale-aks-clusters (for cf-for-k8s, we add some more nodes :-) )

- deploy-cf-for-k8s

While the first four tasks are the default for my AKS deployments, we will focus on the deploy-cf-for-k8s task (note: I will write about the AKS Engine pipeline soon!)

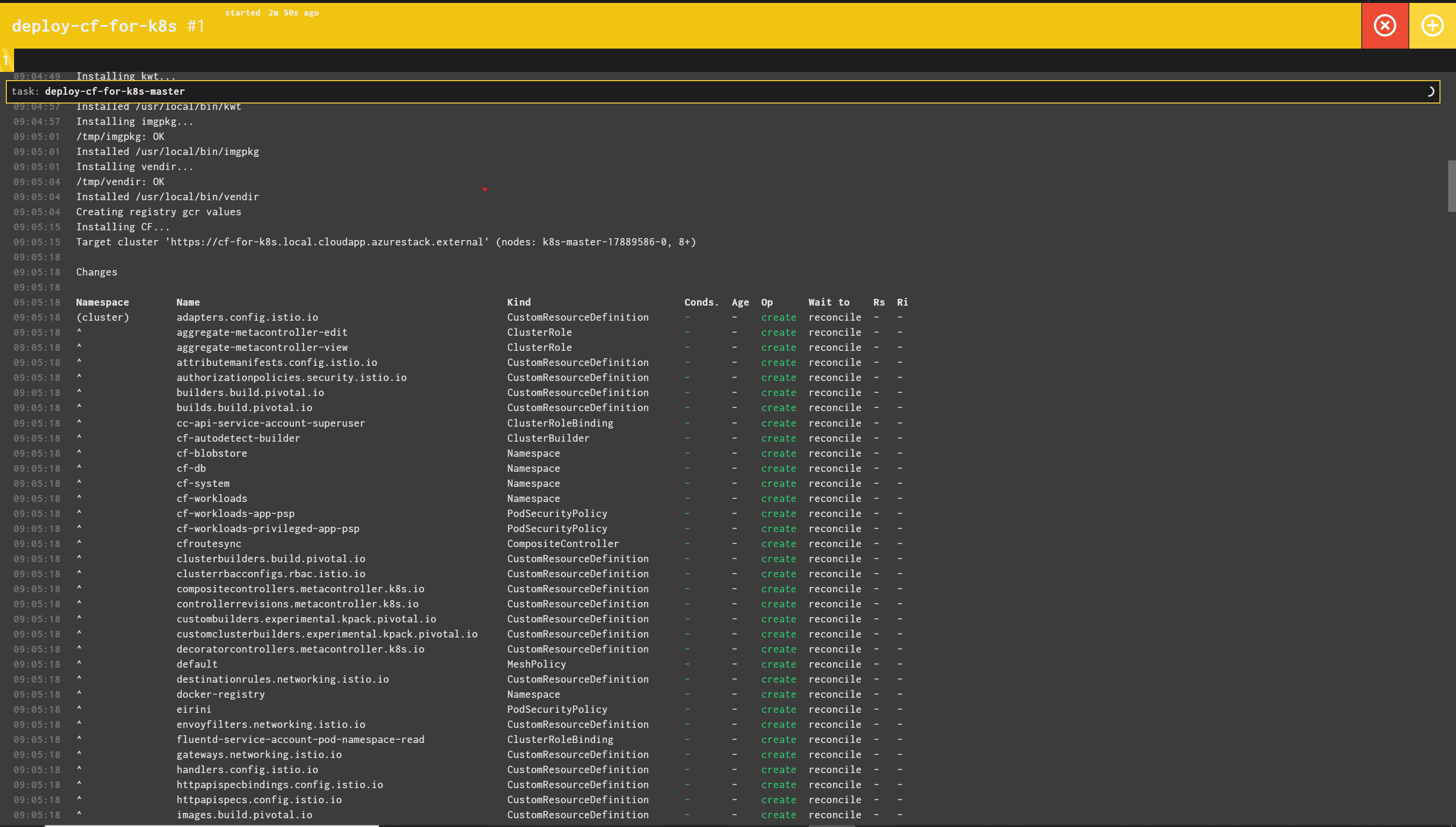

deploy-cf-for-k8s

The deploy-cf-for-k8s task requires the following resources from either GitHub or local storage:

- azs-concourse (required task scripts)

- bosh-cli-release (latest version of the BOSH CLI)

- cf-for-k8s-master (cf-for-k8s master branch)

- yml2json-release (YAML-to-JSON converter)

- platform-automation-image (base image to run the scripts)

Also, the following variables need to be passed:

```yaml
<<: *azure_env # your Azure Stack environment
DNS_DOMAIN: ((cf_k8s_domain)) # the cf domain
GCR_CRED: ((gcr_cred)) # credentials for GCR
```

GCR_CRED contains the credentials for your Google Container Registry. You can provide them either from a secure store like CredHub (the preferred way), by loading the credentials JSON file obtained when creating the secret:

```shell
credhub set -n /concourse/<main or team>/gcr_cred -t json -v "$(cat ../aks/your-project-storage-creds.json)"
```

or load the variable into the pipeline from a YAML file (in this example, gcr.yml):

```yaml
gcr_cred:
  type: service_account
  project_id: your-project_id
  private_key_id: your-private_key_id
  private_key: your-private_key
  client_email: your-client_email
  client_id: your-client_id
  auth_uri: your-auth_uri
  token_uri: your-token_uri
  auth_provider_x509_cert_url: your-auth_provider_x509_cert_url
  client_x509_cert_url: your-client_x509_cert_url
```

and then run:

```shell
fly -t concourse_target set-pipeline -c ${AKS_PIPELINE} \
  -l ${PLATFORM_VARS} \
  -l gcr.yml \
  -l ${AKS_VARS} \
  -p ${AKS_CLUSTER} \
  -v cf_k8s_domain=cf.local.azurestack.external
```
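If you go the YAML-file route, the key fields don't have to be retyped by hand: YAML 1.2 is designed as a superset of JSON, so the downloaded key can be embedded as the value of `gcr_cred` once it is joined onto one line. This is a minimal sketch under that assumption; `make_gcr_vars` is a hypothetical helper, and you should confirm that your fly version parses the result.

```shell
#!/bin/sh
# Hypothetical helper: wrap a GCP service-account key (JSON) into a vars file for `fly -l`.
# Assumes the JSON key is parsed as a YAML flow mapping (valid for YAML 1.2).
make_gcr_vars() {
  key_file=$1
  out_file=$2
  if [ ! -f "$key_file" ]; then
    echo "key file not found: $key_file" >&2
    return 1
  fi
  # Literal newlines in the key file are only formatting: the line breaks of
  # private_key are stored as "\n" escapes inside the JSON string, so deleting
  # real newlines leaves every value unchanged.
  printf 'gcr_cred: %s\n' "$(tr -d '\n' < "$key_file")" > "$out_file"
}
```

For example, `make_gcr_vars ../aks/your-project-storage-creds.json gcr.yml` writes a one-line gcr.yml that the fly call above loads with `-l gcr.yml`. Since the file contains a private key, keep it out of version control.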

the tasks

tbd

Monitoring the installation

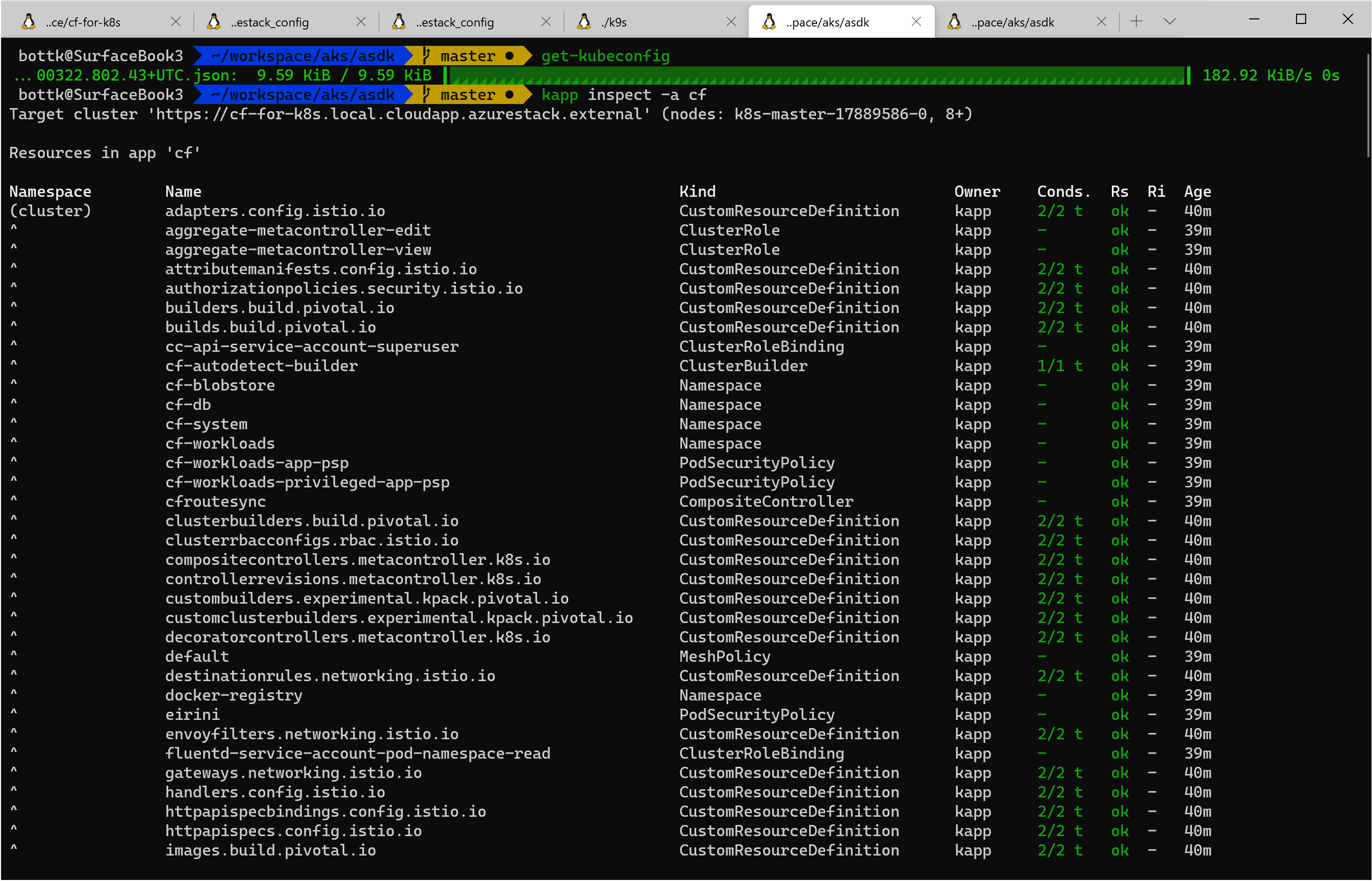

cf-for-k8s is deployed using the k14s tools (ytt and kapp) for an easy, composable deployment; the pipeline does this during the install.

The pipeline may succeed before cf-for-k8s has finished deploying: the deployment time depends on multiple factors, including internet speed, so the kapp deployment may still be ongoing when the pipeline finishes.

To monitor the deployment from your machine, install the k14s tools following the instructions on their site. kapp requires a kubeconfig file to access your cluster, so copy your kubeconfig file (the deploy-aks task stores it in your S3 store after deployment).

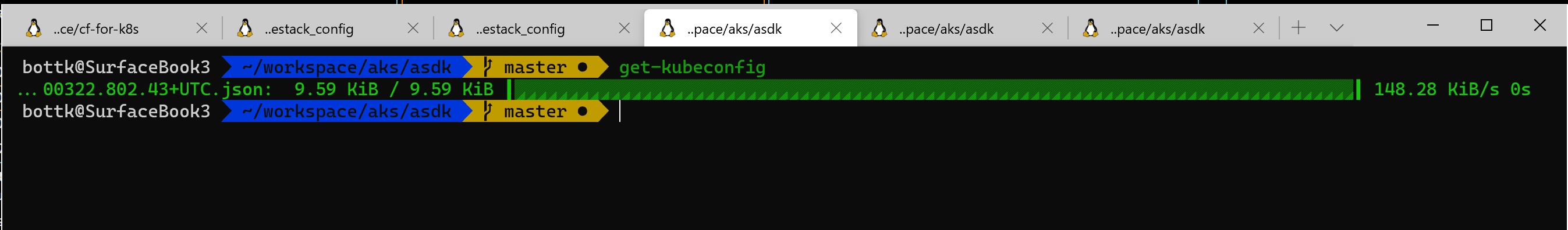

I have an alias that copies my latest kubeconfig file:

```shell
get-kubeconfig
```

To monitor / inspect the deployment, run:

```shell
kapp inspect -a cf
```

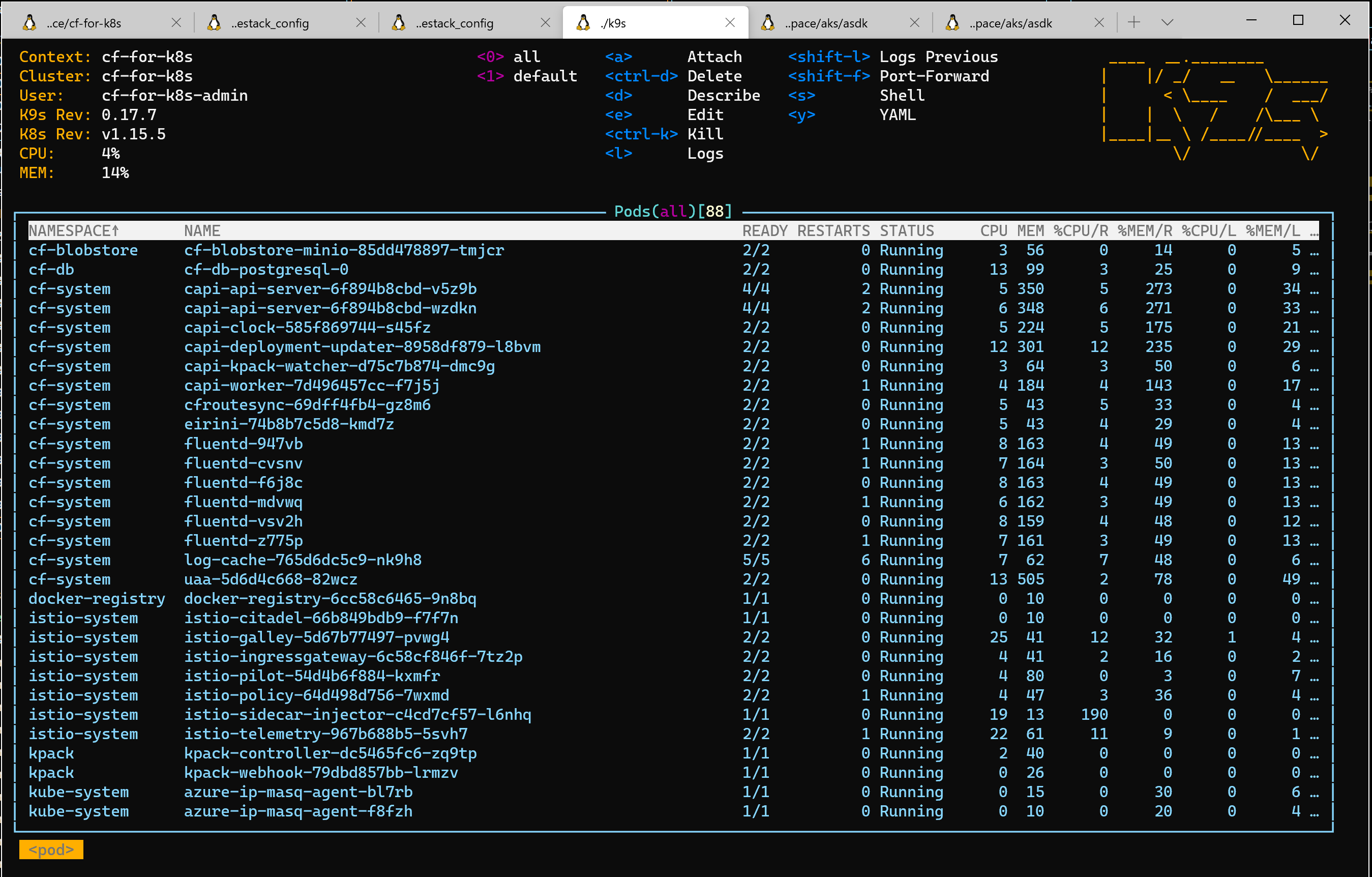

In short, once all pods in the cf-system namespace are running, the system should be ready (be aware that, as there are daily changes, a deployment might fail).
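The "all pods in cf-system are running" check can be scripted. Below is a hypothetical `pods_ready` helper that reads the output of `kubectl get pods -n cf-system --no-headers` on stdin and succeeds only if every pod is Running with all containers ready, or Completed. Counting Completed job pods as done is an assumption about what "ready" means here.

```shell
#!/bin/sh
# Hypothetical readiness check. Input: `kubectl get pods --no-headers` lines,
# i.e. columns NAME READY STATUS RESTARTS AGE.
pods_ready() {
  awk '
    NF < 3 { next }                    # skip blank or truncated lines
    {
      total++
      split($2, r, "/")                # READY column, e.g. 2/2
      if ($3 == "Completed" || ($3 == "Running" && r[1] == r[2])) {
        ok++
      } else {
        print "not ready: " $1 " (" $2 " " $3 ")" > "/dev/stderr"
      }
    }
    END {
      # Succeed only if at least one pod was seen and all of them are ready.
      if (total > 0 && ok == total) exit 0
      exit 1
    }
  '
}
```

A simple polling loop would be `until kubectl get pods -n cf-system --no-headers | pods_ready; do sleep 30; done`. The built-in `kubectl wait --for=condition=Ready pod --all -n cf-system --timeout=600s` is an alternative, but pods of finished jobs never report Ready, so it can time out on them.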

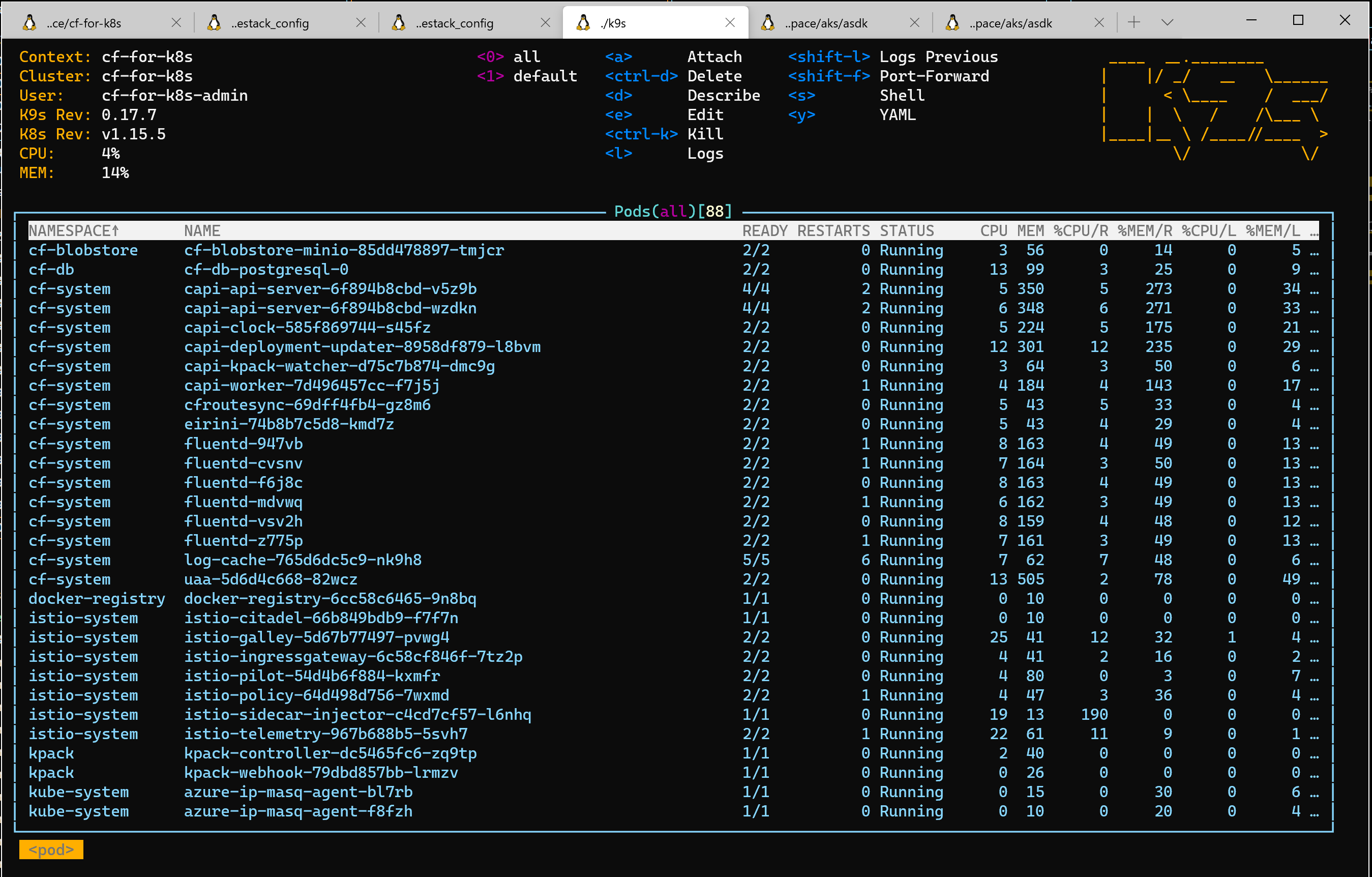

k9s can give you a great overview of the running pods

connect to your Cloud Foundry environment

cf-for-k8s deploys a Service of type LoadBalancer by default. The pipeline creates a DNS A record for the cf domain you specified for the pipeline.

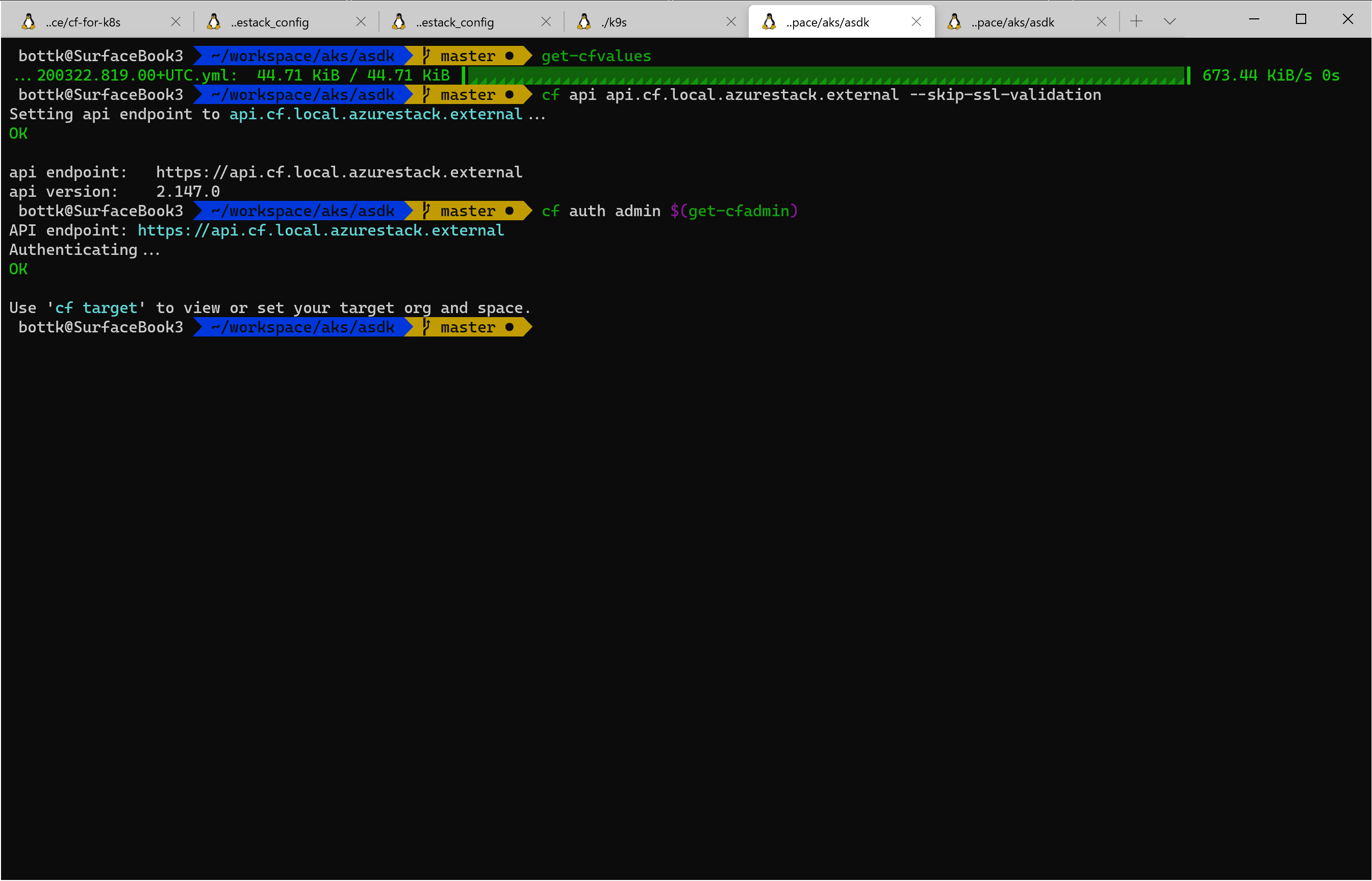

The admin password is autogenerated and stored in cf-values.yml, which gets stored in your S3 location. In my (direnv) environment, I retrieve the file / the credentials with:

```shell
get-cfvalues
get-cfadmin
```
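The get-cfadmin alias itself isn't shown here. A hypothetical equivalent might look like this. It assumes you already copied cf-values.yml locally (for example with get-cfvalues), and that the password sits on a top-level `cf_admin_password:` line, the key name I believe cf-for-k8s' values generator used at the time of writing, so check your own file.

```shell
#!/bin/sh
# Hypothetical stand-in for the get-cfadmin alias.
# Usage: get_cfadmin [path/to/cf-values.yml]   (defaults to ./cf-values.yml)
get_cfadmin() {
  values_file=${1:-cf-values.yml}
  if [ ! -f "$values_file" ]; then
    echo "values file not found: $values_file" >&2
    return 1
  fi
  # Print the value of the first top-level cf_admin_password line, stripping double quotes.
  sed -n 's/^cf_admin_password:[[:space:]]*//p' "$values_file" | head -n 1 | tr -d '"'
}
```

With it, the login step becomes `cf auth admin "$(get_cfadmin cf-values.yml)"`. The underscore in the name keeps it a valid POSIX function name.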

connecting to cf api and logging in

```shell
get-cfvalues
cf api api.cf.local.azurestack.external --skip-ssl-validation
cf auth admin "$(get-cfadmin)"
```

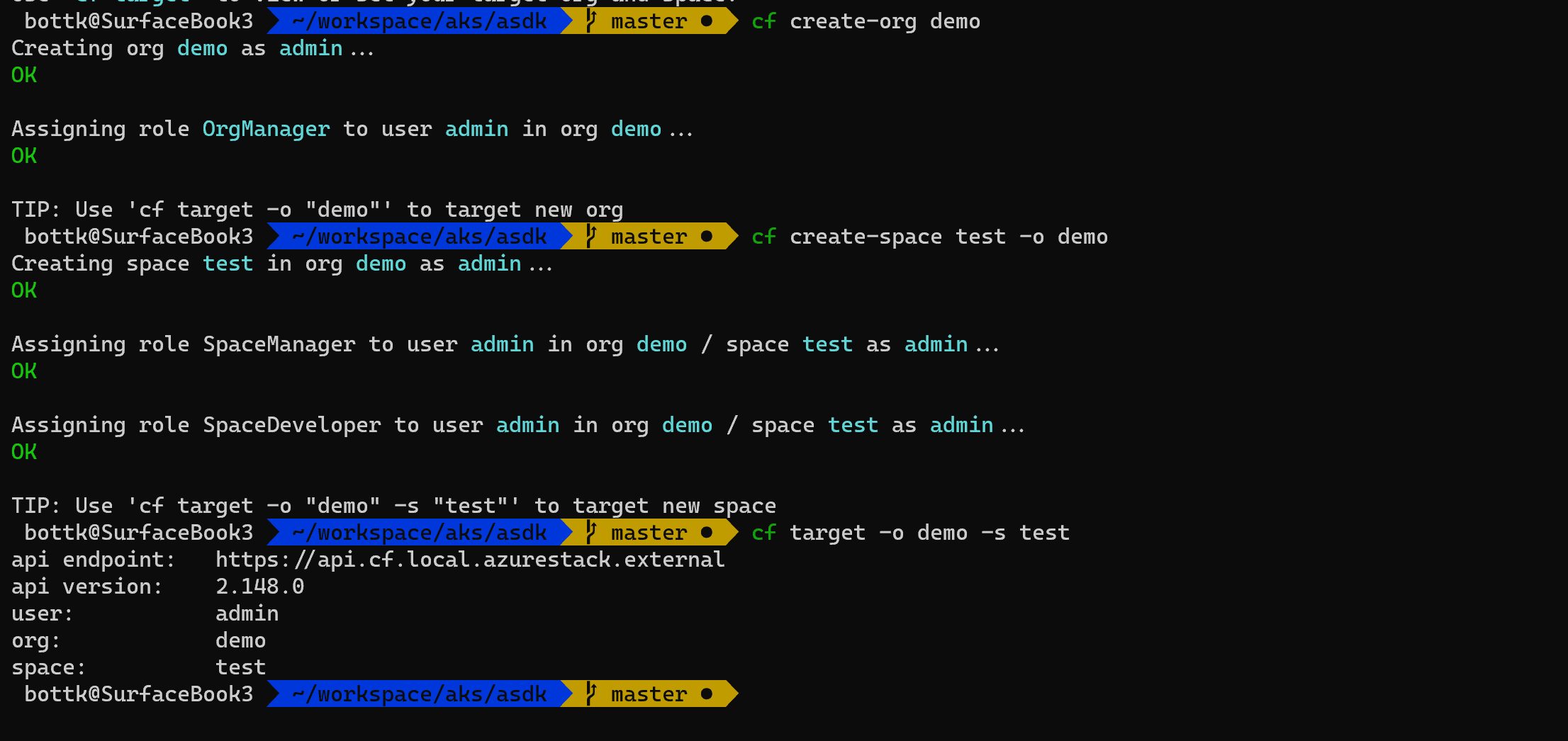

There are no orgs and spaces defined by default, so we create them:

```shell
cf create-org demo
cf create-space test -o demo
cf target -o demo -s test
```

push a docker container

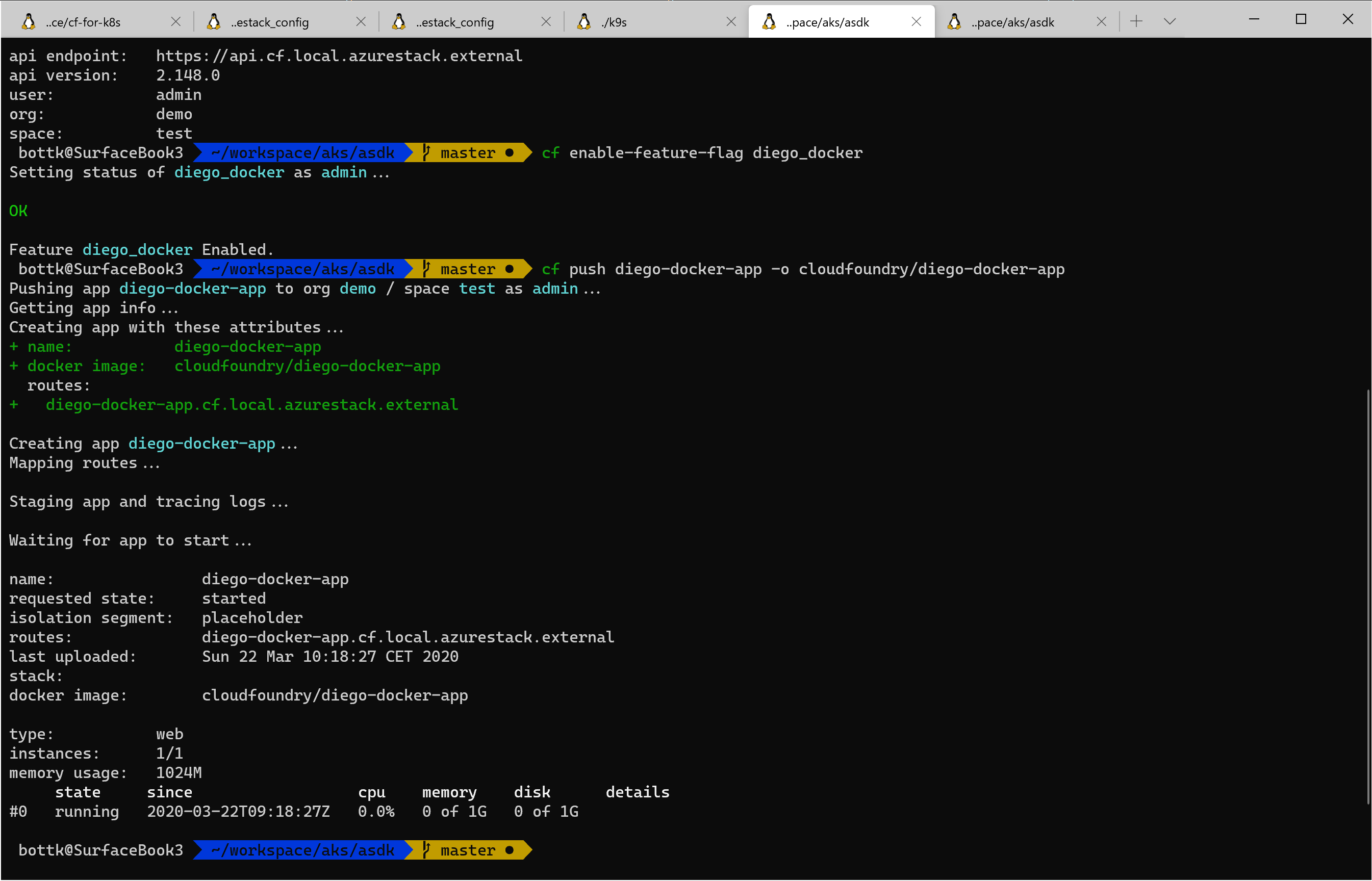

To run Docker containers in Cloud Foundry, you also have to enable the diego_docker feature flag:

```shell
cf enable-feature-flag diego_docker
```

Now it is time to deploy our first Docker container to cf-for-k8s.

Push the diego-docker-app from the cloudfoundry project on Docker Hub:

```shell
cf push diego-docker-app -o cloudfoundry/diego-docker-app
```

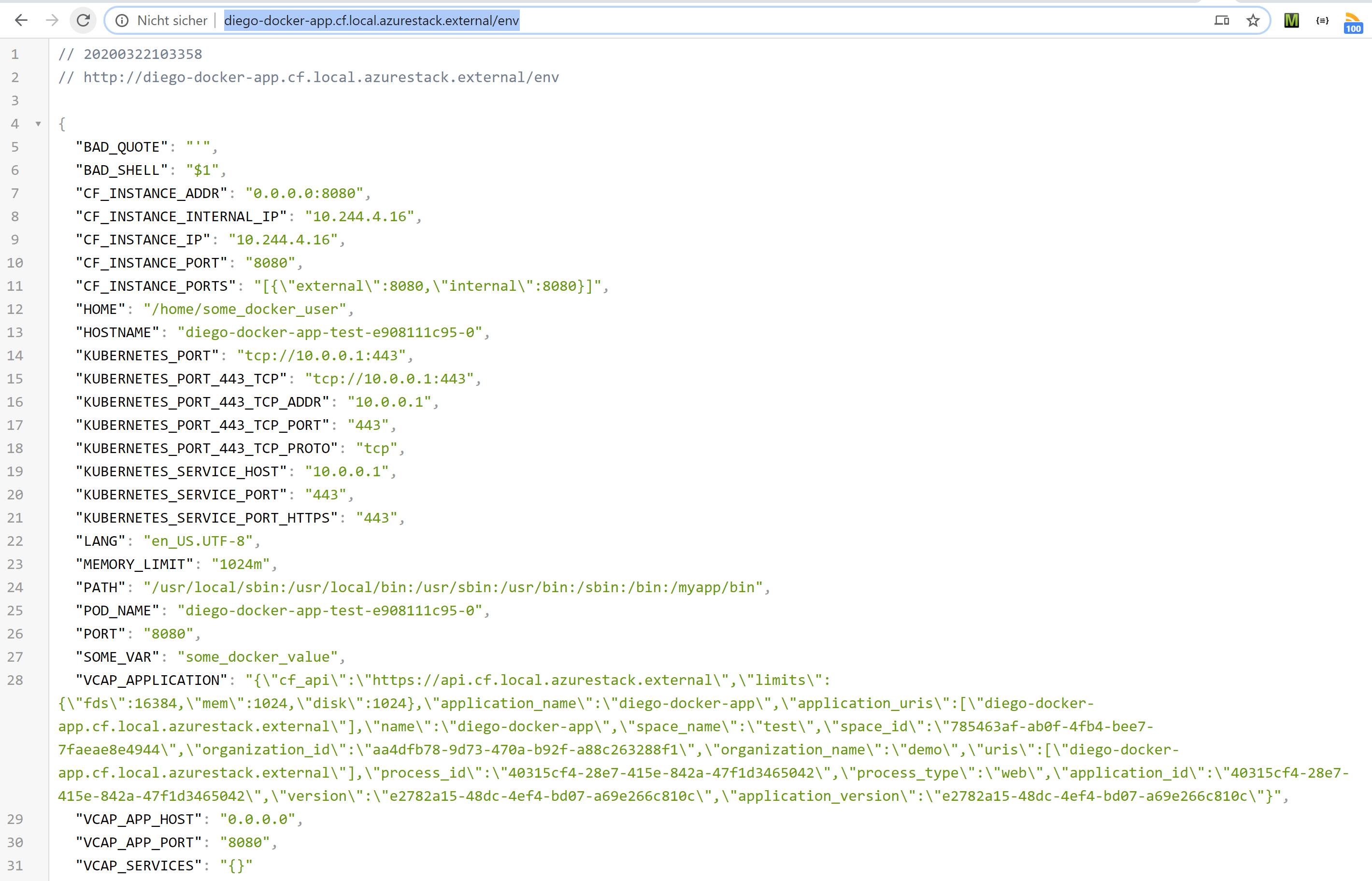

We can now browse the endpoint of the demo app at http://diego-docker-app.cf.local.azurestack.external/env

Or use curl:

```shell
curl http://diego-docker-app.cf.local.azurestack.external/env
```

pushing an app from source

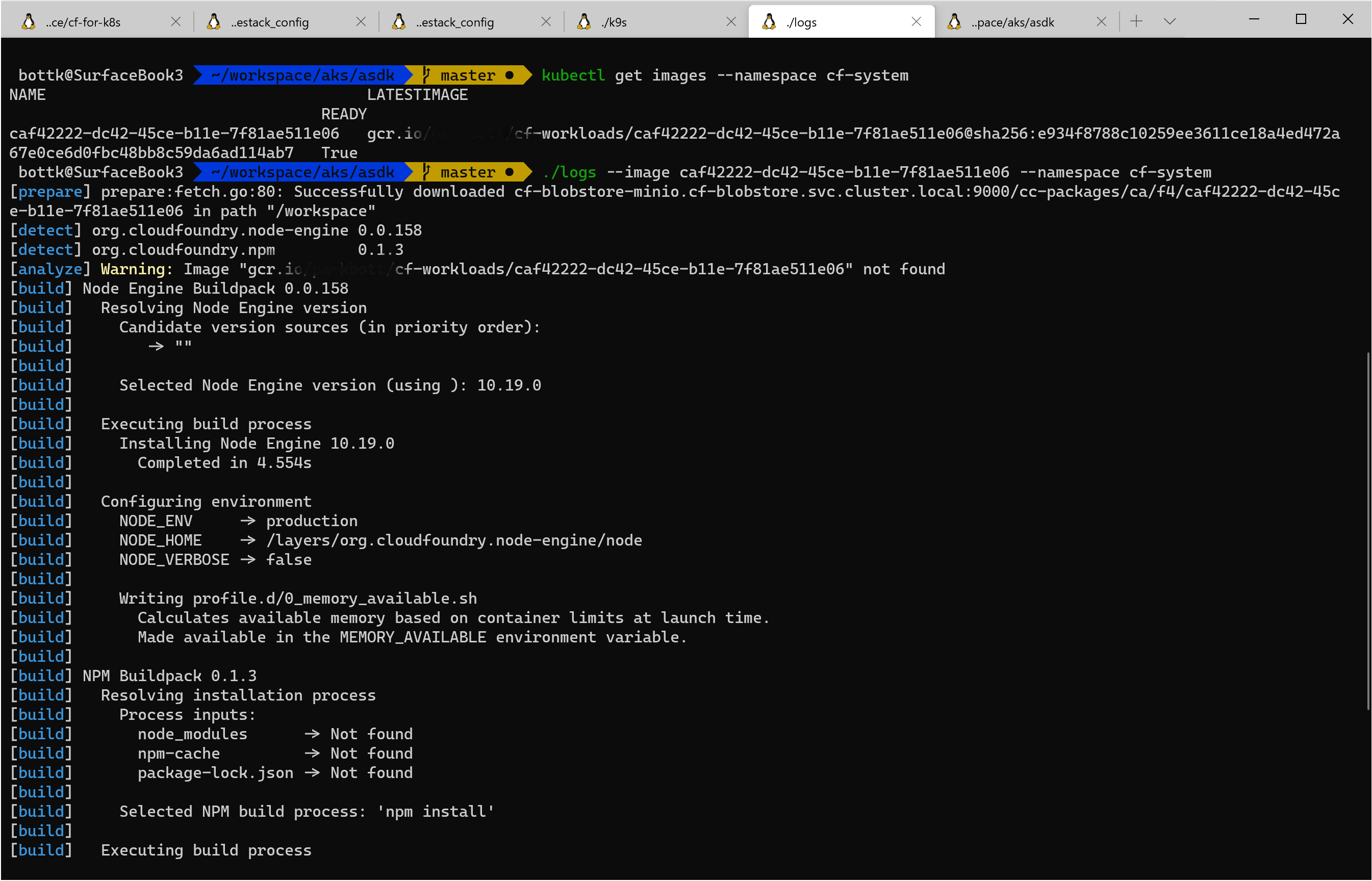

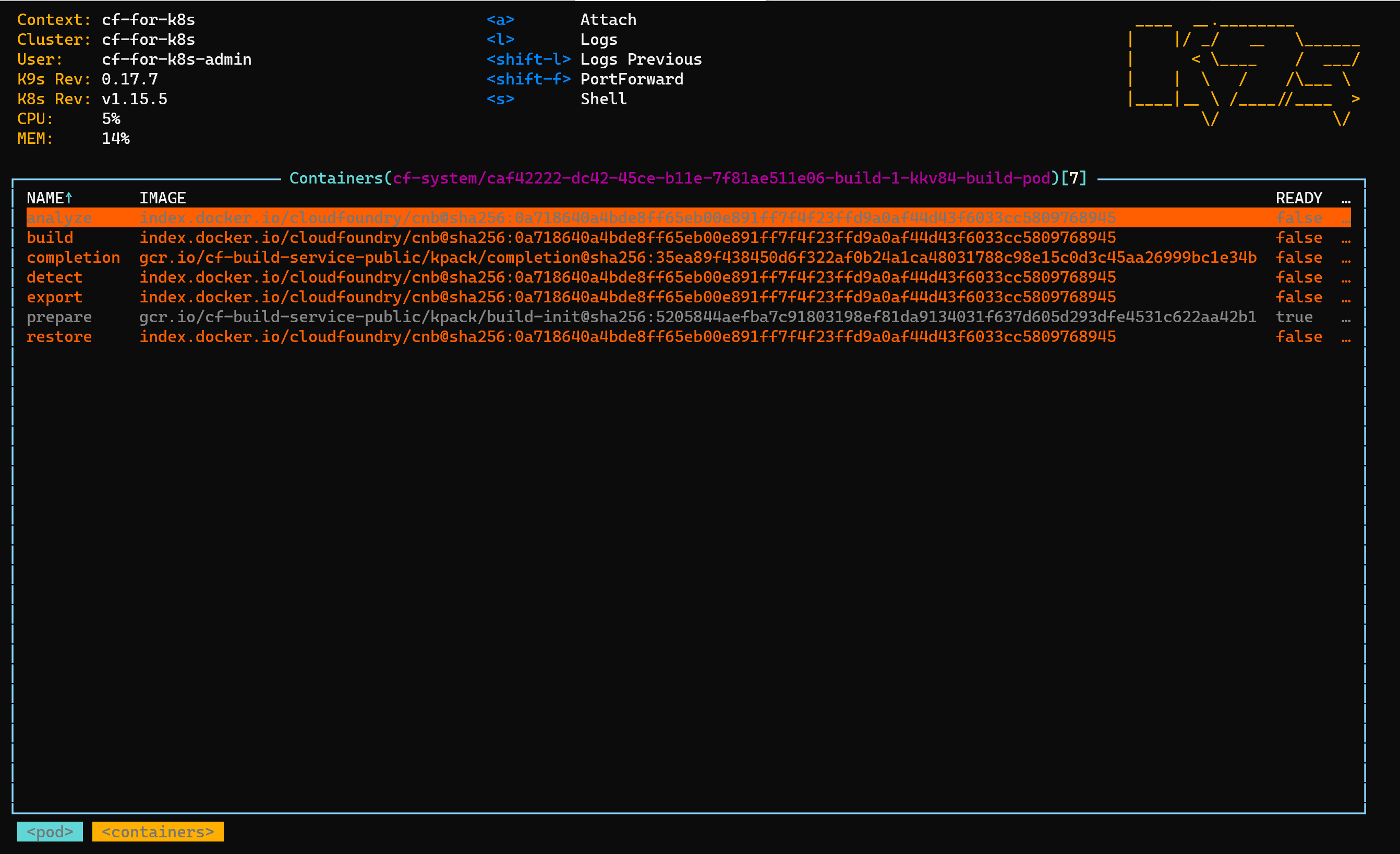

cf-for-k8s uses Cloud Native Buildpacks via kpack. In essence, a watcher is running in the kpack namespace to monitor new build requests from the Cloud Controller. A “build pod” runs the build process of detecting, analyzing, building, and exporting the image.

Once a new request is detected, the ClusterBuilder creates an image and ships it to the (GCR) registry. From there

You can always view and monitor the image build process by viewing the image and using the kpack log-tail utility (logs) to view the builder logs:

```shell
kubectl get images --namespace cf-system
logs --image caf42222-dc42-45ce-b11e-7f81ae511e06 --namespace cf-system
```
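To avoid copying the image name by hand, a hypothetical `tail_latest_build` helper can pick the most recently created kpack image using kubectl's `--sort-by` and JSONPath output, then pass it to the same `logs` utility. It assumes kubectl points at the cluster and the kpack `logs` binary is on your PATH.

```shell
#!/bin/sh
# Hypothetical helper: tail the builder logs of the newest kpack image.
# Usage: tail_latest_build [namespace]   (defaults to cf-system)
tail_latest_build() {
  ns=${1:-cf-system}
  # Sort images by creation time and take the name of the last (newest) one.
  image=$(kubectl get images --namespace "$ns" \
    --sort-by=.metadata.creationTimestamp \
    -o jsonpath='{.items[-1:].metadata.name}')
  if [ -z "$image" ]; then
    echo "no kpack images found in namespace $ns" >&2
    return 1
  fi
  logs --image "$image" --namespace "$ns"
}
```

This is handy right after a `cf push` from source, when the newest image is the one currently being built.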