Create Customized Pod Configs for PPDM Pods

Disclaimer: use this at your own risk.

Why?

The PowerProtect Data Manager Inventory Source uses standardized configurations and ConfigMaps for the pods deployed by PPDM, e.g. cProxy, PowerProtect Controller, and Velero.

In this post, I will use an example to deploy the cProxies to dedicated nodes using node affinity. This makes perfect sense if you want to separate backup nodes from production nodes.

Further examples could include using CNI plugins like Multus, DNS configurations, etc.

The method described here is available from PPDM 19.10 and will be surfaced in the UI in future versions.

1. What we need

The examples below must be run from a bash shell. We will use jq to modify JSON documents.
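Since the later steps also call curl, kubectl and base64, a quick check that everything is on the PATH can save some debugging. The following is only a minimal sketch; `check_tools` is a hypothetical helper name, not part of PPDM or kubectl:

```shell
#!/usr/bin/env bash
# check_tools is a hypothetical helper: it reports every listed command
# that cannot be found on the PATH and returns non-zero if any is missing.
check_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For the steps in this post, it could be called as `check_tools jq curl kubectl base64`.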

2. Adding labels to worker nodes

In order to use node affinity for our pods, we first need to label nodes for dedicated usage.

In this example, we label a node with tier=backup.

A corresponding pod configuration example would look like:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: tier
                operator: In # the node's tier label must be *in* the listed values
                values:
                - backup
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent

We will use a customized template section later for our cProxy.

So first, label the node(s) you want to use for backup:

    kubectl label nodes ocpcluster1-ntsgq-worker-local-2xl2z tier=backup

2. Create a Configuration Patch for the cProxy

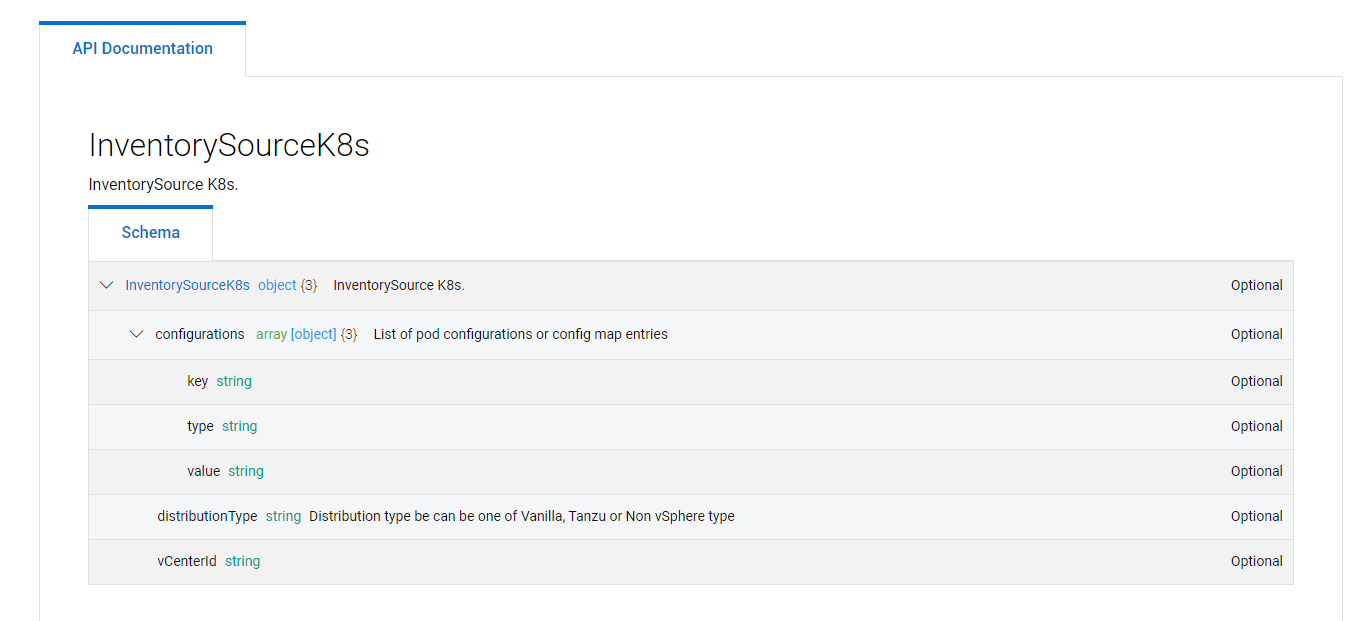

We create a manifest patch for the cProxy from a YAML document. It is base64-encoded and passed to our Inventory Source as the value of a configuration of type POD_CONFIG. The API Reference describes the format of the configuration.

The CPROXY_CONFIG variable below will contain the base64-encoded document:

    CPROXY_CONFIG=$(base64 -w0 <<EOF
    ---
    metadata:
      labels:
        app: cproxy
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: tier
                operator: In
                values:
                - backup
    EOF
    )

3. Patching the Inventory Source using the PowerProtect Data Manager API
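Before sending anything to the API, it can be worth confirming that the encoded value decodes back to the YAML you intended, since a heredoc that lost its indentation would still encode without complaint. A minimal sketch, where `decode_config` is a hypothetical helper name:

```shell
# decode_config is a hypothetical helper: it prints the decoded form of a
# base64 string so the YAML can be inspected before it is sent to PPDM.
decode_config() {
  printf '%s' "$1" | base64 -d
}
```

Calling `decode_config "$CPROXY_CONFIG"` should print the patch exactly as it was written in the heredoc, including the nesting under `spec.affinity`.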

You might want to review the PowerProtect Data Manager API documentation.

For the following commands, we will use some bash variables to describe your environment:

    PPDM_SERVER=<your ppdm fqdn>
    PPDM_USERNAME=<your ppdm username>
    PPDM_PASSWORD=<your ppdm password>
    K8S_ADDRESS=<your k8s api address the cluster is registered with, see UI: Asset Sources --> Kubernetes --> Address>

3.1 Logging in to the API and retrieving the Bearer Token

The code below reads the Bearer Token into the TOKEN variable:

    TOKEN=$(curl -k --request POST \
      --url https://${PPDM_SERVER}:8443/api/v2/login \
      --header 'content-type: application/json' \
      --data '{"username":"'"${PPDM_USERNAME}"'","password":"'"${PPDM_PASSWORD}"'"}' | jq -r .access_token)

3.2 Select the Inventory Source ID based on the Asset Source Address
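Before querying the inventory sources, it helps to confirm that the login actually returned a token: `jq -r` prints the literal string `null` when `access_token` is absent, so a failed login would otherwise only surface in the next call. A small sketch, with `require_value` as a hypothetical helper name:

```shell
# require_value NAME VALUE
# Hypothetical helper: fails when VALUE is empty or the literal "null"
# that jq -r prints for a missing field.
require_value() {
  if [ -z "$2" ] || [ "$2" = "null" ]; then
    echo "$1 is empty, check the previous request" >&2
    return 1
  fi
}
```

A call such as `require_value TOKEN "$TOKEN" || exit 1` stops a script early; the same check can be applied to K8S_INVENTORY_ID once it has been read.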

Select the inventory ID matching your Asset Source address:

    K8S_INVENTORY_ID=$(curl -k --request GET https://${PPDM_SERVER}:8443/api/v2/inventory-sources \
      --header "Content-Type: application/json" \
      --header "Authorization: Bearer ${TOKEN}" \
      | jq --arg k8saddress "${K8S_ADDRESS}" '[.content[] | select(.address==$k8saddress)]| .[].id' -r)

3.3 Read Inventory Source into Variable

With the K8S_INVENTORY_ID from above, we read the Inventory Source JSON document into a variable:

    INVENTORY_SOURCE=$(curl -k --request GET https://${PPDM_SERVER}:8443/api/v2/inventory-sources/$K8S_INVENTORY_ID \
      --header "Content-Type: application/json" \
      --header "Authorization: Bearer ${TOKEN}")

3.4 Adding the Patched cProxy Config to the Variable

Using jq, we will modify the Inventory Source JSON document to include our base64-encoded cProxy config.

For that, we will add a list with the following content:

    "configurations": [
      {
        "type": "POD_CONFIG",
        "key": "CPROXY",
        "value": "someBase64Document"
      }
    ]

Other POD_CONFIG keys we could modify are POWERPROTECT_CONTROLLER and VELERO. The jq command below adds the CPROXY entry to the document:

    INVENTORY_SOURCE=$(echo "$INVENTORY_SOURCE" | \
      jq --arg cproxyConfig "${CPROXY_CONFIG}" '.details.k8s.configurations += [{"type": "POD_CONFIG","key": "CPROXY", "value": $cproxyConfig}]')

3.4 Patching the Inventory Source in PPDM
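One thing to watch before uploading: `+=` appends, so running the jq step twice against the same document leaves two CPROXY entries in the list. How PPDM treats duplicates is not covered here, so the following sketch simply avoids them by dropping any existing entry with the same type and key first. `set_pod_config` is a hypothetical helper name; the `.details.k8s.configurations` path is the one used in the jq command above.

```shell
# set_pod_config JSON KEY VALUE
# Hypothetical helper: returns JSON with exactly one POD_CONFIG entry for KEY,
# replacing an existing one instead of appending a duplicate.
set_pod_config() {
  printf '%s' "$1" | jq --arg key "$2" --arg value "$3" '
    .details.k8s.configurations =
      ((.details.k8s.configurations // [])
        | map(select(.type != "POD_CONFIG" or .key != $key))
        + [{"type": "POD_CONFIG", "key": $key, "value": $value}])
  '
}
```

It could be used as `INVENTORY_SOURCE=$(set_pod_config "$INVENTORY_SOURCE" CPROXY "$CPROXY_CONFIG")`, and it can be re-run safely after changing the patch.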

We now use a PUT request to upload the patched Inventory Source document to PPDM:

curl -k -X PUT https://${PPDM_SERVER}:8443/api/v2/inventory-sources/$K8S_INVENTORY_ID \

--header "Content-Type: application/json" \

--header "Authorization: Bearer $TOKEN" \

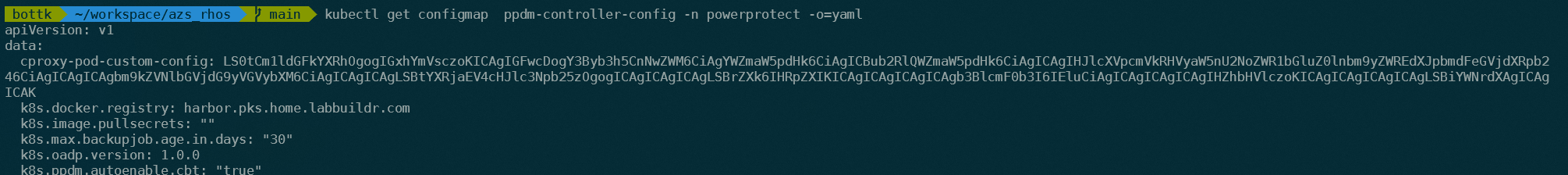

-d "$INVENTORY_SOURCE"We can verify the patch by checking the PowerProtect Configmap in the PPDM Namespace.

    kubectl get configmap ppdm-controller-config -n powerprotect -o=yaml

The ConfigMap must now contain cproxy-pod-custom-config in data.
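For scripted runs, the same check can be reduced to an exit code. A minimal sketch, assuming the key shows up as an indented `cproxy-pod-custom-config:` line in the YAML output; `configmap_has_key` is a hypothetical helper name:

```shell
# configmap_has_key KEY
# Hypothetical helper: reads `kubectl get configmap ... -o yaml` output on
# stdin and succeeds if KEY appears as an indented YAML key.
configmap_has_key() {
  grep -Eq "^[[:space:]]+$1:"
}
```

For example: `kubectl get configmap ppdm-controller-config -n powerprotect -o yaml | configmap_has_key cproxy-pod-custom-config && echo "custom config present"`.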

The next backup job will now use the labeled node(s) for backup!

3.5 Start a Backup and verify the node affinity for cproxy pod

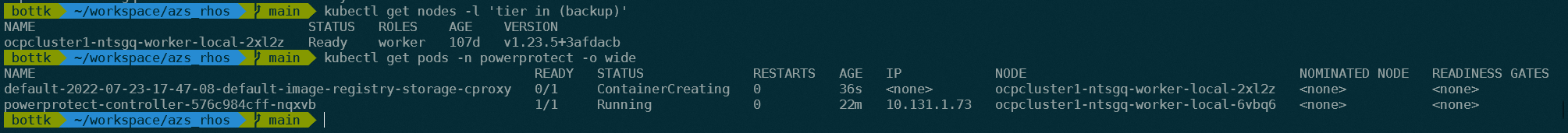

First, identify the node(s) you labeled:

    kubectl get nodes -l 'tier in (backup)'

Once the backup job creates the cProxy pod, it should be scheduled on one of the identified nodes:

    kubectl get pods -n powerprotect -o wide
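With many pods in the namespace, comparing the NODE column by eye gets tedious. The sketch below flags pods that run outside a given set of nodes. `pods_outside_nodes` is a hypothetical helper, and selecting the cProxy pods with `-l app=cproxy` in the commented usage is an assumption based on the label set in the patch, not something PPDM guarantees.

```shell
# pods_outside_nodes NODE...
# Hypothetical helper: reads "POD NODE" pairs on stdin, prints every pod that
# runs on a node not listed in the arguments, and returns 1 if any was found.
pods_outside_nodes() {
  awk -v allowed="$*" '
    BEGIN { n = split(allowed, a, " "); for (i = 1; i <= n; i++) ok[a[i]] = 1 }
    !($2 in ok) { print $1 " is on " $2; bad = 1 }
    END { exit bad }
  '
}

# Possible usage against a live cluster (not executed here):
#   BACKUP_NODES=$(kubectl get nodes -l tier=backup -o name | sed 's|^node/||')
#   kubectl get pods -n powerprotect -l app=cproxy --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName \
#     | pods_outside_nodes $BACKUP_NODES
```

No output and a zero exit code mean every listed pod landed on one of the labeled nodes.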

This concludes the patching of the cProxy configuration. Stay tuned for more.